March 18, 2025

From Simulated Cognition to Digital Twins – Making Product Design More Human-Centered

Since May 2023, the Junior Research Groups at ScaDS.AI Dresden/Leipzig have been researching innovative AI solutions that go beyond the traditional scope of our institute. From personalised coaching programs for students to human-centered automated mobility and advanced data analysis methods for ML systems, these young research groups bring new perspectives to the fields of AI and data science.

In this podcast series, we introduce each group and their projects. The researchers themselves provide insights into their work and discuss the future perspectives of their projects. All contributions are available as an audio podcast (in German only) and as text (in German and English).

Our modern world is poorly designed. Well, that might be a bit of an exaggeration, but in many areas of our daily lives we encounter systems, programs or interfaces that make us feel as if we were never considered in the design process. Switches are too far apart, input fields are unintuitive, or it just takes too long to navigate from setting A to function B.

Product development is a long and extremely expensive process. User studies are essential for fine-tuning design and functionality to human needs, but they are often too expensive and complex to conduct on a large scale – especially for small development teams. This creates a problem.

Hanna Bussmann: Most of the time, only the human average is tested. People who don’t fit that average may be excluded, unable to use the device properly or even at greater risk of injury.

Hanna Bussmann is a bioinformatics student at the University of Leipzig. She is currently writing her master’s thesis at ScaDS.AI, specifically in the CIAO research group.

Computational Interaction and Mobility, CIAO for short, is one of the junior research groups at ScaDS.AI. Its aim is to use machine learning to make design and product development processes more human-centered. Dr Patrick Ebel is the group’s leader.

Patrick Ebel: We build user models, computational models that analyze and predict user behavior to improve interfaces. These interfaces can range from smartphone UIs to in-car infotainment systems.

At the heart of the research is the detailed simulation of human user behavior. A digital system that simulates every conceivable and likely user interaction can nearly fully digitize user studies. This not only speeds up the design process and reduces costs but also allows small development teams to test their products at scale – even when they lack the capacity for extensive user studies.

But, as is often the case, this is easier said than done. Human behavior is highly complex – even in seemingly simple tasks like using a smartphone app. CIAO’s research follows two main approaches: data-driven modelling and reinforcement learning modelling. Data-driven modelling relies on large sets of usage data from which machine learning identifies patterns.

Two Approaches: Data-driven Modelling…

Patrick Ebel: Models can be data-driven, meaning they are based on recorded usage data: where people click, what apps they open, what features they use and how long they take. If we have large data sets, we can infer interaction behaviour from them. And if the dataset is large enough, we can optimise for a particular metric – such as accuracy – to create a model that interacts in a human-like way.

Of course, there are drawbacks to this approach. First, we need huge amounts of training data. Second, the behavior of the model is not always fully explainable.

…and Reinforcement Learning

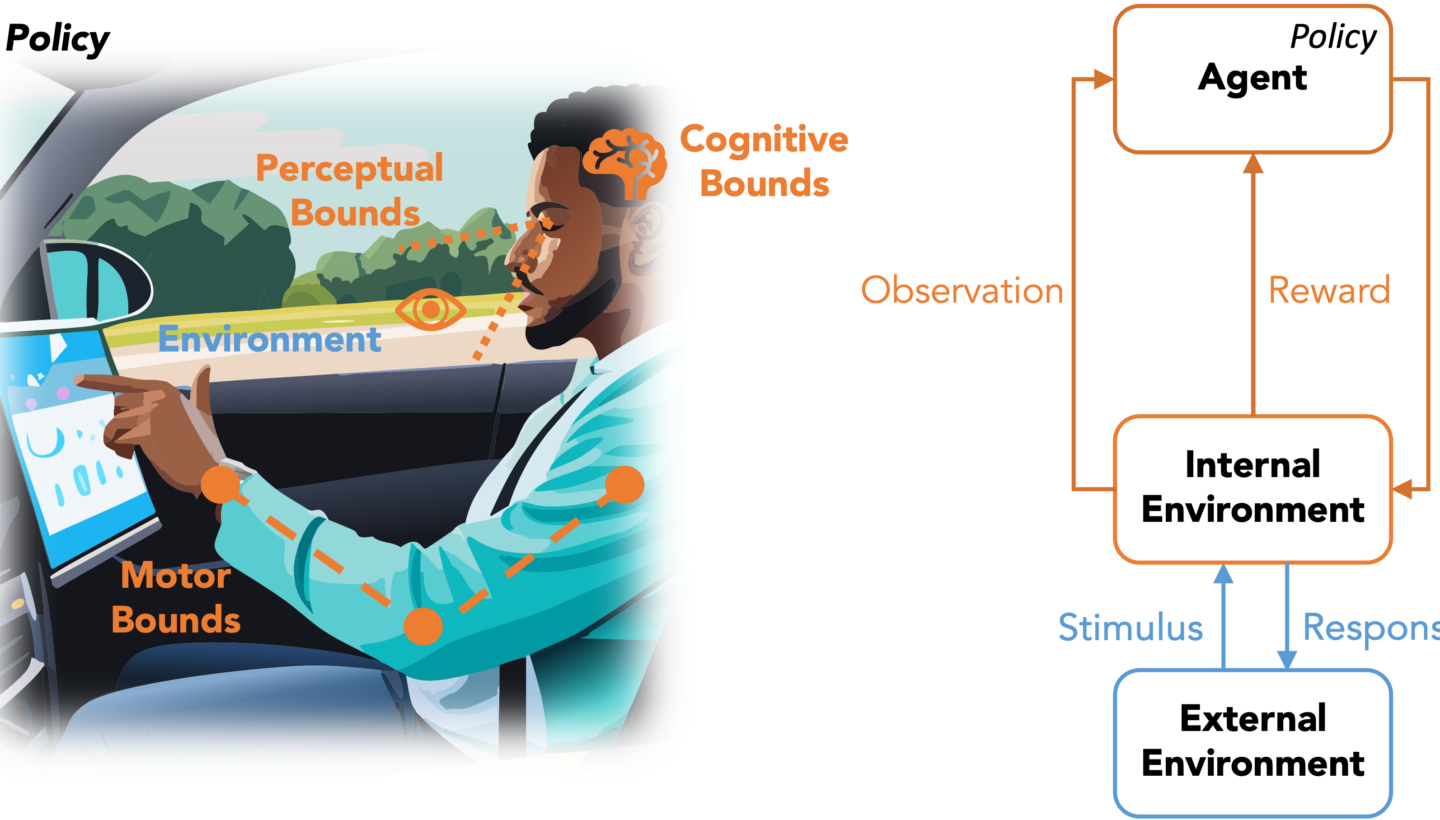

The second approach, reinforcement learning, works differently. Instead of predicting behavior based on existing usage data, it simulates users from scratch. The idea is to create a digital agent that, with no prior knowledge, attempts to interact with a new product as a human would. This concept is rooted in computational rationality, a theory that explains human behavior as the outcome of optimal choices made with limited cognitive and physical resources.

Humans face imperfections. We become distracted, struggle to read small text, and often react too slowly – like watching a sandwich fall instead of catching it. Our cognitive, motor, and sensory resources have limits. To function effectively, we adapt to our environment and act efficiently.

Digital systems, on the other hand, operate in a fundamentally different way. Most of the time they perform tasks as quickly and accurately as possible. They do not experience limitations such as selective attention or distraction. However, if digital agents are to simulate human behavior, they need to perform tasks not perfectly, but in a way that mimics human limitations – that is, slower and with errors. This requires the imposition of artificial constraints.

Patrick Ebel: We want to develop agents with deliberately imposed boundaries. A reinforcement learning agent will typically perform superhumanly when given a task. But our goal is not to achieve superhuman performance – it is to achieve human-like behavior.

Take driving as an example. A conventional AI agent would use a camera that captures the entire field of view in sharp detail and accurately tracks all objects. Humans, however, can only see a small area clearly at any one time and need to actively explore their surroundings.

So we give our reinforcement learning agents an eye model that limits their perception to what a human would see. We base this on findings from cognitive and experimental psychology, for example how long it takes a person to move their gaze from one point to another. By setting these ‘boundaries’ and optimizing tasks accordingly, we aim to replicate realistic human behavior.
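To make the idea of a perceptual boundary more concrete, here is a minimal sketch in Python. It is not CIAO's eye model: the class `BoundedEye`, its parameters, and all numeric values are assumptions chosen purely for illustration. It shows the two constraints described above, a small area of sharp vision and a time cost for moving the gaze.

```python
import math
from dataclasses import dataclass


@dataclass
class BoundedEye:
    """Hypothetical eye model: only objects near the gaze point are seen clearly."""

    gaze: tuple = (0.0, 0.0)         # current gaze point (x, y), arbitrary units
    sharp_radius: float = 2.0        # assumed radius of sharp vision
    shift_base_s: float = 0.2        # assumed fixed time per gaze shift
    shift_per_unit_s: float = 0.002  # assumed extra time per unit of distance

    def observe(self, objects):
        """Return each object's position if it is in sharp view, else None."""
        return {
            name: pos if math.dist(self.gaze, pos) <= self.sharp_radius else None
            for name, pos in objects.items()
        }

    def look_at(self, target):
        """Shift the gaze to target and return the time this costs in seconds."""
        distance = math.dist(self.gaze, target)
        self.gaze = target
        if distance == 0:
            return 0.0
        return self.shift_base_s + self.shift_per_unit_s * distance


# Illustrative scene with made-up coordinates
scene = {"mirror": (1.0, 1.0), "road_sign": (10.0, 0.0)}
eye = BoundedEye()
print(eye.observe(scene))               # the distant road sign is not resolved yet
print(eye.look_at(scene["road_sign"]))  # time spent moving the gaze
print(eye.observe(scene))               # now the sign is sharp, the mirror is not
```

An agent that only receives `observe()` output has to decide where to look next and pays for every gaze shift, which is the kind of trade-off a human driver faces as well.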

Computational Rationality and Boundaries

CIAO’s development of reinforcement learning agents draws on cognitive and experimental psychology as well as biomechanics. Even for something as simple as tapping on a screen, there are three key factors to consider:

- Perception – how quickly can we shift our attention to a new stimulus? How small can symbols and text be and still be readable?

- Cognition – how many stimuli can we process at once? How much can our short-term memory hold? How quickly can we react?

- Motor skills – how fast can we move our arm? How long and precise are our fingers? How flexible is our wrist?

Modeling all three aspects enables the construction of a digital twin based on cognitive psychology experiments. After defining the digital twin’s key characteristics, researchers can easily adjust them using ‘boundaries’. This allows simulations to represent a diverse range of human users, not just the average.

Hanna Bussmann: I can lengthen fingers, enlarge the palm, or simulate conditions like muscular disorders. I can then test how well an interface accommodates people with different characteristics.

Patrick Ebel: If I evaluate the ergonomics of a smartphone by inviting ten people, they are likely to fall within a certain height range. It’s unlikely that someone 1.51m or 2.10m tall will be included. This is where reinforcement learning models come in, because they don’t rely on real-world usage data. Instead, we can adjust the models and their boundaries – such as hand size – and observe how interactions change. Computationally rational models are particularly valuable for this purpose.
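The principle of changing one boundary and watching the outcome shift can be illustrated with a very small sketch. This is not a reinforcement learning agent and not the group's biomechanical model, only a geometric stand-in: the function `reachable_fraction`, the button layout, the thumb pivot, and the thumb lengths are all hypothetical values picked for the example.

```python
import math

# Hypothetical button positions on a phone screen, in cm from the bottom-left corner
BUTTONS_CM = [(6.0, 2.0), (4.0, 5.0), (2.0, 6.0)]

# Assumed position of the thumb joint when holding the phone in the right hand
THUMB_PIVOT_CM = (7.0, 0.0)


def reachable_fraction(thumb_length_cm, buttons=BUTTONS_CM, pivot=THUMB_PIVOT_CM):
    """Return the share of buttons that lie within thumb reach of the pivot."""
    within_reach = [b for b in buttons if math.dist(pivot, b) <= thumb_length_cm]
    return len(within_reach) / len(buttons)


# Sweep the 'hand size' boundary instead of recruiting users of each hand size
for thumb_length in (5.0, 6.5, 8.0):
    share = reachable_fraction(thumb_length)
    print(f"thumb {thumb_length} cm: {share:.0%} of buttons reachable")
```

In a full simulation the reach check would be replaced by a trained agent acting under the adjusted constraint, but the evaluation loop has the same shape: vary the boundary, rerun, compare.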

The Advantages of Reinforcement Learning

Is an app or a driver assistance system really usable for people with smaller hands or weaker eyesight? Reinforcement learning models can help answer this question. Since this approach does not rely on existing data, designers can use it to test a wide range of simulated human impairments. Reinforcement learning models can also be used very flexibly.

Patrick Ebel: For example, if we collect data from iOS users, we have to ask whether it generalizes to Android systems. It probably doesn’t. However, with a computational framework, the agent learns to perceive and interact with interfaces in general. This means that we can train it on both iOS and Android and use it for general evaluation.

Ideally, we also wouldn’t need to constantly consult experts. Instead, the model could be integrated into design tools like Figma and provide real-time feedback: “The like button is too small, make it bigger,” or “Use softer colors for a more aesthetic design.”

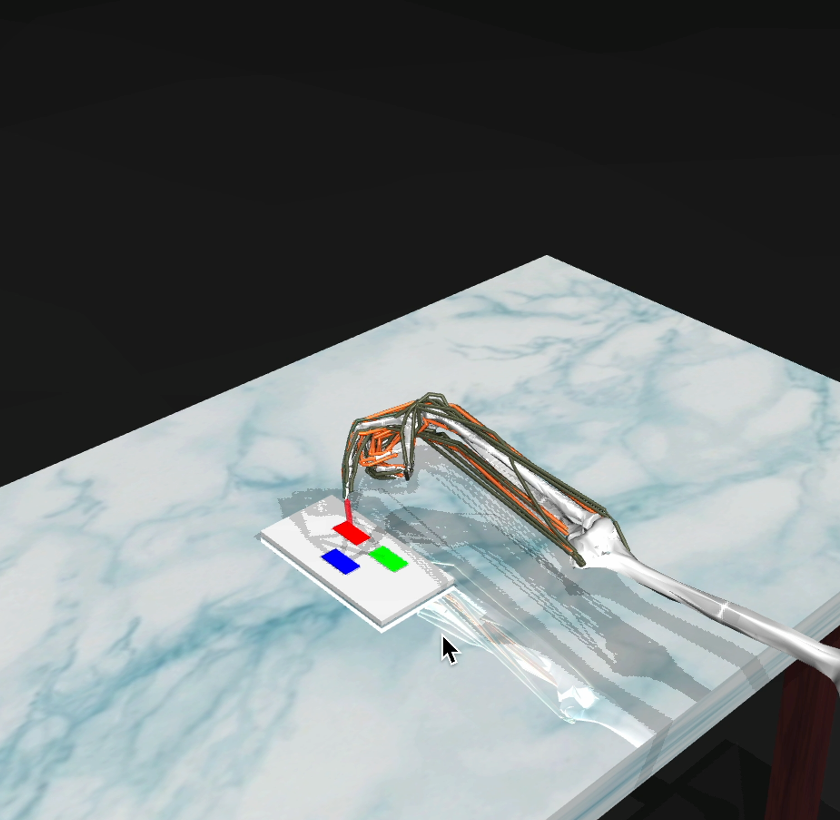

It will still be some time before CIAO reaches this level. Modeling a human stimulus-response system from the ground up requires not only enormous computing power, but also meticulous attention to detail. Hanna Bussmann’s master’s thesis, for example, examines how to simulate the biomechanics of typing. Her model must account for more than 60 different muscles, each with different strengths and ranges, just to simulate the movement of a single finger across a keyboard. More complex tasks, such as driving a car, add even more layers of difficulty.

Patrick Ebel: Of course, there are still challenges – such as the fact that prediction accuracy is far from the desired level or that large amounts of training data are required. Additionally, reinforcement learning agents often behave unpredictably or react sensitively to changes. There is still a lot of work to be done.

Opportunities and Current Research

Initially, the research group focused on improving mobility and user interfaces in vehicles. Today, the researchers apply the basic idea of simulating human behavior in several domains, with three ongoing Ph.D. projects, two master’s theses, and various other internal research initiatives.

Current reinforcement learning research topics include biomechanical modeling of the human arm, situational awareness modeling, and video game interaction modeling on an old Atari console. In addition, data-driven projects focus on analyzing in-car infotainment systems and developing a generative agent capable of simulating an entire city’s traffic. The group is also working on a VR-based driving simulator to conduct experiments to optimize the digital twin.

The field in which CIAO, Patrick Ebel, and Hanna Bussmann are working is vast, and its potential is immense. When we think about how to optimize our digitized society with all its screens and user interfaces, it’s not just about comfort – it’s about accessibility, efficiency and safety.

Understanding human behavior and translating it into digital models remains a challenge, but the Junior Research Group’s research shows that human-centered design with machine learning is not only possible but paves the way for a better-designed future.