April 23, 2025

Is ChatGPT a security risk for smart homes?

The spotlight and IT security – Victor Jüttner is a man of many talents. A doctoral candidate at ScaDS.AI Dresden/Leipzig since 2023, he conducts research in the field of data protection and digital security under Prof. Erik Buchmann. Specifically, he investigates the opportunities and risks that ChatGPT poses for the security of smart home systems. Can language models infer details of residents’ private lives from smart home data? Beyond his research, he performs at science slams and demonstrates how voice assistants can also contribute to cybersecurity – as he did at this year’s National Conference on IT Security Research 2025.

Smart homes – convenience with risks

A fridge that knows when you’re out of oat milk, a thermostat that knows the temperature you want in the morning, and a hallway light that turns on automatically when you get home: smart homes are almost synonymous with modern living.

According to a study by industry association Bitkom, up to 46 percent of households in Germany now use smart home technologies for things like lighting control, heating regulation or security cameras. But what makes everyday life easier also collects data – huge amounts of data that paint a picture of the household. If this data were to be stolen, for example by a hacker, could modern data analytics be used to reconstruct an entire private life? AI language models such as ChatGPT make complex data analysis methods more accessible than ever before, so the question of security risks naturally arises.

In the study “ChatAnalysis Revisited: Can ChatGPT Undermine Privacy in Smart Homes with Data Analysis?”, recently published in the journal i-com, Jüttner examines how well ChatGPT can recognize behavior patterns from smart home data using simple prompts – and how low the threshold for potential attacks already is.

Smart home data analysis with ChatGPT

Large language models such as ChatGPT make it easier to work with large amounts of data in many areas, such as programming, data analysis or machine learning. However, this easy access to data analysis tools can also be problematic. For example, attackers can more easily obtain detailed information about people’s private lives from stolen data – or so many fear.

Researchers widely recognise that analysing sensor data from smart lights, radiator thermostats, or motion sensors can reveal people’s behaviour. The question is how quickly, how well and how easily ChatGPT can perform such an analysis, and then recognise and interpret behavioural patterns in smart home data.

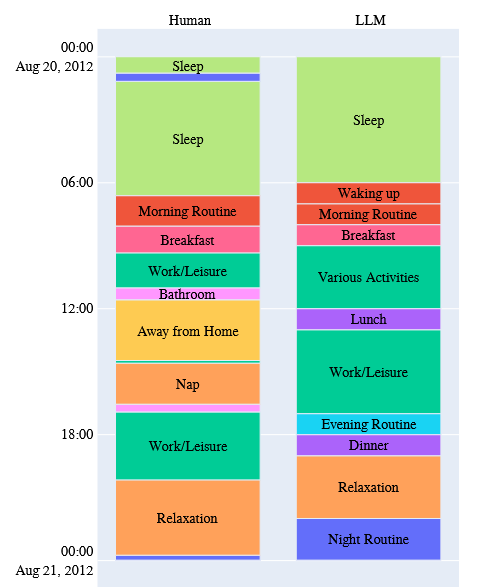

Contrary to expectations, however, ChatGPT’s results in the study were rather poor. The language model was fed data from the CASAS datasets, which are widely used in behavioural research and contain a wealth of information from smart home devices. The researcher tasked the ChatGPT-4 and 4o models with reconstructing the behaviour of the occupants of a house, using simple prompts. Although the systems demonstrated familiarity with all the techniques required for such an investigation, they failed to implement them: ChatGPT was unable to derive a pattern of behaviour from the sensor data. In theory, the assistant can only achieve results when given much smaller, simpler and more frequent prompts. According to the study, the risk of low-threshold analysis of stolen smart home data therefore does not yet exist with current language models.
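To make concrete what “recognising a behaviour pattern” in sensor data can mean, here is a minimal Python sketch. It is not the method used in the study: the event log, the sensor names and the helper functions are all invented for illustration, and the one-event-per-line layout is only loosely modelled on timestamped sensor logs. It shows that even a few lines of conventional code can estimate when a household wakes up, when it goes to bed and when nobody is home.

```python
from collections import Counter, defaultdict
from datetime import datetime

# Hypothetical motion-sensor log: "date time sensor_id state".
# Sensor names and timestamps are invented for illustration.
RAW_EVENTS = """\
2025-01-06 06:58:12 M_BEDROOM ON
2025-01-06 07:03:40 M_BATHROOM ON
2025-01-06 07:21:05 M_KITCHEN ON
2025-01-06 07:44:51 M_HALLWAY ON
2025-01-06 18:02:17 M_HALLWAY ON
2025-01-06 18:10:33 M_KITCHEN ON
2025-01-06 22:47:09 M_BEDROOM ON
2025-01-07 06:55:48 M_BEDROOM ON
2025-01-07 07:05:02 M_BATHROOM ON
2025-01-07 07:19:30 M_KITCHEN ON
2025-01-07 07:46:12 M_HALLWAY ON
2025-01-07 18:05:44 M_HALLWAY ON
2025-01-07 22:51:27 M_BEDROOM ON
"""


def parse_events(raw):
    """Parse the log into (timestamp, sensor) tuples, keeping only ON events."""
    events = []
    for line in raw.strip().splitlines():
        date, time, sensor, state = line.split()
        if state == "ON":
            ts = datetime.strptime(f"{date} {time}", "%Y-%m-%d %H:%M:%S")
            events.append((ts, sensor))
    return events


def daily_activity_window(events):
    """Per day, return the first and the last motion event (rough wake/sleep times)."""
    by_day = defaultdict(list)
    for ts, sensor in events:
        by_day[ts.date()].append((ts, sensor))
    return {day: (min(items), max(items)) for day, items in by_day.items()}


def longest_gap(events):
    """Return (duration, start, end) of the longest same-day pause between events."""
    ordered = sorted(events)
    gaps = [
        (later[0] - earlier[0], earlier[0], later[0])
        for earlier, later in zip(ordered, ordered[1:])
        if earlier[0].date() == later[0].date()
    ]
    return max(gaps)


def hourly_counts(events):
    """Count how often each sensor fired in each hour of the day."""
    return Counter((sensor, ts.hour) for ts, sensor in events)


if __name__ == "__main__":
    events = parse_events(RAW_EVENTS)
    for day, (first, last) in daily_activity_window(events).items():
        print(day, "first motion:", first[0].time(), "last motion:", last[0].time())
    duration, start, end = longest_gap(events)
    print("longest pause:", duration, "from", start, "to", end)
```

On this invented log, the long daytime pause between two hallway events suggests when the home is empty. Real datasets are far larger and noisier, which is part of what makes the analysis hard to automate with simple prompts.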

ChatGPT as a security assistant

Although ChatGPT still falls short as an analysis tool, it can help smart home owners in other ways. Jüttner recently demonstrated this at the Erfindergeist Science Slam on the eve of this year’s National Conference on IT Security Research 2025. Under the title “Cybersecurity in the Smart Home”, he presented a self-developed ChatGPT assistant to help individuals defend against cyber attacks on smart home applications.

Networked smart appliances are a popular target for hackers. In addition to data theft, there is the risk of misuse in so-called botnets – networks of unnoticed infected devices remotely controlled by attackers. Hackers use these botnets to send massive data requests to servers, triggering large-scale cyber attacks that deliberately overload systems. The problem is that many users are unaware of how attackers misuse their devices or how they can protect themselves. This is where Jüttner’s idea comes in.

In his slam, Jüttner presented his vision of a digital security assistant based on ChatGPT and developed specifically for smart homes. The idea: the AI-powered bot not only detects potential security vulnerabilities but also explains what to do in the event of an attack – in a simple, concrete way that even non-technical people can understand.

“With the help of ChatGPT, I have developed a security assistant for smart homes that not only informs users about attacks, but also provides concrete, easy-to-implement steps for independent problem solving. This allows everyone to regain control over their smart devices,” says Jüttner.

The system is designed to translate technical warnings into understandable language and provide quick instructions based on the user manual for the device in question. The goal is an assistance system that speaks to users on their own level: not with technical terms, but with quick, practical tips such as resetting to factory settings or disabling certain functions.
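The idea of turning a technical warning into plain-language steps can be illustrated with a small Python sketch. This is explicitly not Jüttner’s assistant, which is based on ChatGPT: the sketch swaps the language model for a fixed lookup table, and the alert codes, the `Alert` class and the `explain` function are hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    device: str  # human-readable device name, e.g. "hallway camera"
    code: str    # hypothetical alert identifier, not a real product's code


# Hypothetical alert codes mapped to (plain explanation, list of steps).
ADVICE = {
    "UNUSUAL_OUTBOUND_TRAFFIC": (
        "is sending far more data to the internet than usual. "
        "It may have been taken over by an attacker.",
        [
            "Disconnect the device from your Wi-Fi.",
            "Reset it to factory settings as described in its manual.",
            "Set a new password before reconnecting it.",
        ],
    ),
    "REMOTE_ACCESS_ENABLED": (
        "can be reached from outside your home network.",
        ["Disable remote access in the device settings if you do not use it."],
    ),
}


def explain(alert: Alert) -> str:
    """Turn a technical alert into a short plain-language message."""
    if alert.code not in ADVICE:
        # Fallback for alerts the table does not cover.
        return (
            f"Your {alert.device} reported a problem we cannot explain yet. "
            "Please check its user manual."
        )
    explanation, steps = ADVICE[alert.code]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"Your {alert.device} {explanation}\nWhat you can do:\n{numbered}"


if __name__ == "__main__":
    print(explain(Alert("hallway camera", "UNUSUAL_OUTBOUND_TRAFFIC")))
```

A fixed table only covers the cases someone wrote down in advance. The appeal of a language model in this role is that it could phrase advice for alerts and devices nobody anticipated, drawing on the device’s user manual.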

Victor Jüttner at FameLab Dresden on April 27, 2025

The next opportunity to experience Victor Jüttner’s research live is on April 27, 2025, when he will once again take the stage at the Dresden regional competition of the international science slam competition FameLab with his topic “Cybersecurity in Smart Homes”. You can find more information about the event on the TU Dresden website and in the program of the Staatsschauspiel Dresden.