December 15, 2025

ScaDS.AI at NeurIPS and EurIPS 2025

The 39th Annual Conference on Neural Information Processing Systems (NeurIPS 2025) took place in San Diego, USA, from December 2 to 7. Several researchers from ScaDS.AI Dresden/Leipzig attended the conference and presented their research there. At the same time, researchers were present at the European counterpart to the conference, EurIPS, which took place in Copenhagen, Denmark, as a community-driven conference that showcases papers accepted at NeurIPS.

The conference in San Diego spanned six full days of panels, talks, workshops, competitions, and poster sessions on topics across machine learning, artificial intelligence, and computational neuroscience. EurIPS featured invited keynotes, paper presentations, and poster sessions, as well as two days of workshops.

Contributions to NeurIPS and EurIPS 2025

The following topics were presented and discussed in workshops at NeurIPS:

Geometry matters: Insights from Ollivier Ricci Curvature and Ricci Flow into representational alignment.

Abstract: Representational similarity analysis (RSA) is widely used to analyze the alignment between humans and neural networks; however, conclusions based on this approach can be misleading without considering the underlying representational geometry. Our work introduces a framework using Ollivier Ricci Curvature and Ricci Flow to analyze the fine-grained local structure of representations. This approach is agnostic to the source of the representational space, enabling a direct geometric comparison between human behavioral judgments and a model’s vector embeddings. We apply it to compare human similarity judgments for 2D and 3D face stimuli with a baseline 2D-native network (VGG-Face) and a variant of it aligned to human behavior.

Our results suggest that geometry-aware analysis provides a more sensitive characterization of discrepancies and geometric dissimilarities in the underlying representations that remain only partially captured by RSA. Notably, we reveal geometric inconsistencies in the alignment when moving from 2D to 3D viewing conditions. This highlights how incorporating geometric information can expose alignment differences missed by traditional metrics, offering deeper insight into representational organization.

Authors: Nahid Torbati, Michael Gaebler, Simon M. Hofmann, Nico Scherf

This poster was discussed in the NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations. Find the proceedings article and poster here.

On the Impact of Topological Regularization on Geometrical and Topological Alignment in Autoencoders: An Empirical Study.

Abstract: We present a comparative empirical study on the impact of topological regularization on autoencoders (AEs) and variational autoencoders (VAEs) across six synthetic datasets with known topology and curvature. Particularly, we probe the alignment of the topology and geometry of the dimensionality-reduced latent representation with that of the data. To quantify geometrical alignment, we estimate the mean extrinsic curvature of the latent embedding by fitting local quadrics. We find that topological regularization can significantly improve the geometrical alignment of latent and data, even when the training objective emphasizes topological alignment alone, without regard for reconstruction quality.

Authors: Samuel Graepler, Nico Scherf, Anna Wienhard, Diaaeldin Taha

This topic was presented at the NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations. Find the poster here.
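
As a rough illustration of the local-quadric curvature estimate mentioned in the abstract above, the following sketch fits a quadric to the nearest neighbours of a point on a sampled surface and reads off the mean curvature. It is not the authors' implementation: the function name `mean_curvature_at`, the neighbourhood size `k`, the PCA-based local frame, and the unit-sphere test data are all assumptions made for this example.

```python
import numpy as np

def mean_curvature_at(points, index, k=30):
    """Estimate |mean curvature| of a sampled 2D surface in 3D at points[index]."""
    p = points[index]
    dist = np.linalg.norm(points - p, axis=1)
    nbrs = points[np.argsort(dist)[:k]] - p  # k nearest neighbours, p at origin

    # PCA of the neighbourhood: the last right-singular vector has the least
    # variance and approximates the surface normal.
    _, _, vt = np.linalg.svd(nbrs - nbrs.mean(axis=0), full_matrices=False)
    x, y, z = (nbrs @ vt.T).T

    # Least-squares quadric z = a x^2 + b xy + c y^2 + d x + e y + f.
    design = np.column_stack([x**2, x * y, y**2, x, y, np.ones_like(x)])
    a, b, c, d, e, _ = np.linalg.lstsq(design, z, rcond=None)[0]

    # Mean curvature of the graph z = f(x, y), evaluated at the origin.
    fxx, fxy, fyy = 2 * a, b, 2 * c
    num = (1 + e**2) * fxx - 2 * d * e * fxy + (1 + d**2) * fyy
    return abs(num) / (2 * (1 + d**2 + e**2) ** 1.5)

# Hypothetical test data: random points on the unit sphere.
rng = np.random.default_rng(0)
sphere = rng.normal(size=(2000, 3))
sphere /= np.linalg.norm(sphere, axis=1, keepdims=True)
estimate = mean_curvature_at(sphere, index=0)
```

For the unit sphere the true mean curvature has magnitude 1, so `estimate` should come out close to that value. The absolute value is taken because the sign depends on the orientation of the estimated normal.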

Predicting Microbial Interactions Using Graph Neural Networks

Abstract: Predicting interspecies interactions is a key challenge in microbial ecology, as these interactions are critical to determining the structure and activity of microbial communities. In this work, we used data on monoculture growth capabilities, interactions with other species, and phylogeny to predict a negative or positive effect of interactions. More precisely, we used one of the largest available pairwise interaction datasets to train our models, comprising over 7,500 interactions between 20 species from two taxonomic groups co-cultured under 40 distinct carbon conditions, with a primary focus on the work of Nestor et al.

In this work, we propose Graph Neural Networks (GNNs) as a powerful classifier to predict the direction of the effect. We construct edge-graphs of pairwise microbial interactions in order to leverage shared information across individual co-culture experiments, and use GNNs to predict modes of interaction. Our model can not only predict binary interactions (positive/negative) but also classify more complex interaction types such as mutualism, competition, and parasitism. Our initial results were encouraging, achieving an F1-score of 80.44%. This significantly outperforms comparable methods in the literature, including conventional Extreme Gradient Boosting (XGBoost) models, which reported an F1-score of 72.76%.

Authors: Elham Gholamzadeh, Kajal Singla, Nico Scherf

The poster was discussed in the workshop New Perspectives in Graph Machine Learning at NeurIPS 2025. Find the poster here.

A Comparative Empirical Study of Relative Embedding Alignment in Neural Dynamical System Forecasters

Abstract: We study representation alignment in neural forecasters using anchor-based, geometry-agnostic relative embeddings that remove rotational and scaling ambiguities, enabling robust cross-seed and cross-architecture comparisons. Across diverse periodic, quasi-periodic, and chaotic systems and a range of forecasters (MLPs, RNNs, Transformers, Neural ODE/Koopman, ESNs), we find consistent family-level patterns: MLPs align with MLPs, RNNs align strongly, Transformers align least with others, and ESNs show reduced alignment on several chaotic systems. Alignment generally tracks forecasting accuracy (higher similarity predicts lower multi-step MSE), yet strong performance can occur with weaker alignment (notably for Transformers). Relative embeddings thus provide a practical, reproducible basis for comparing learned dynamics.

Authors: Deniz Kucukahmetler, Maximilian Jean Hemmann, Julian Mosig von Aehrenfeld, Maximilian Amthor, Christian Deubel, Nico Scherf, Diaaeldin Taha

The topic was presented and discussed at the NeurIPS 2025 Workshop on Symmetry and Geometry in Neural Representations. It was also presented at EurIPS 2025 in Copenhagen. Find the poster here.
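
To make the idea of anchor-based relative embeddings from the abstract above more concrete, here is a minimal sketch. It assumes one common formulation, in which each latent vector is re-expressed by its cosine similarities to a fixed set of anchor samples. The abstract does not spell out the exact construction, so this choice, the helper name `relative_embedding`, and the synthetic latents are assumptions for illustration only.

```python
import numpy as np

def relative_embedding(embeddings, anchors):
    """Cosine similarity of every embedding (rows) to every anchor (rows)."""
    e = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    a = anchors / np.linalg.norm(anchors, axis=1, keepdims=True)
    return e @ a.T

rng = np.random.default_rng(0)
latents_a = rng.normal(size=(100, 8))            # hypothetical latents of model A
anchor_idx = rng.choice(100, size=10, replace=False)

# Hypothetical model B: the same latents, randomly rotated and rescaled.
rotation, _ = np.linalg.qr(rng.normal(size=(8, 8)))
latents_b = 3.0 * latents_a @ rotation

rel_a = relative_embedding(latents_a, latents_a[anchor_idx])
rel_b = relative_embedding(latents_b, latents_b[anchor_idx])
max_diff = float(np.abs(rel_a - rel_b).max())    # agrees up to rounding error
```

Because cosine similarity is unchanged by orthogonal transformations and uniform rescaling, the two relative embeddings coincide even though the raw latent coordinates differ. This is the sense in which such embeddings allow comparisons across seeds and architectures.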

Furthermore, the following posters were presented during the poster sessions of NeurIPS 2025:

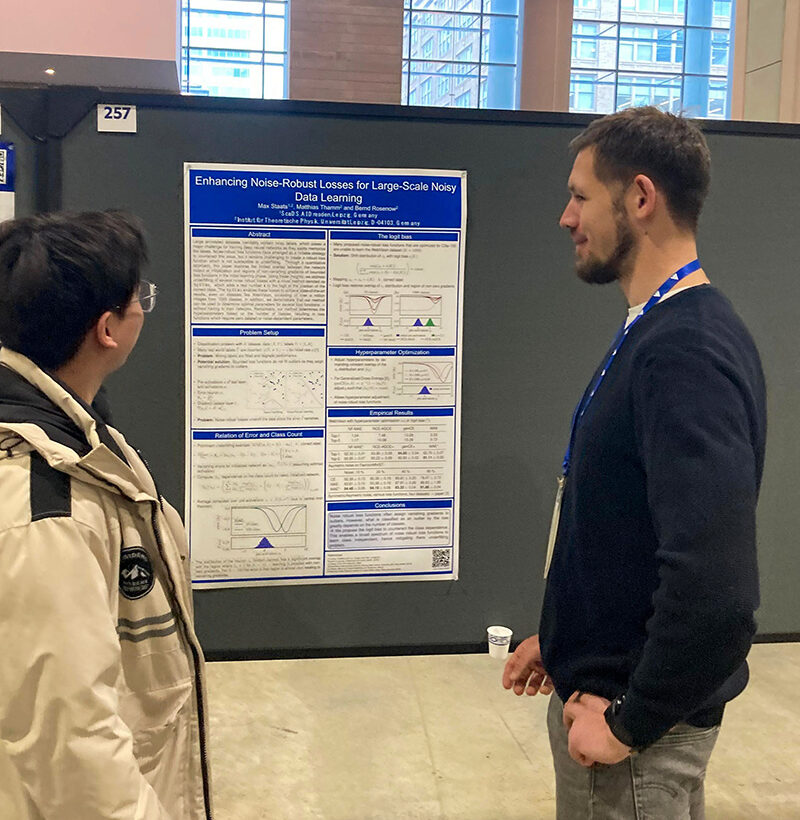

Small Singular Values Matter: A Random Matrix Analysis of Transformer Models

Abstract: This work analyzes singular-value spectra of weight matrices in pretrained transformer models to understand how information is stored at both ends of the spectrum. Using Random Matrix Theory (RMT) as a zero-information hypothesis, we associate agreement with RMT as evidence of randomness and deviations as evidence for learning. Surprisingly, we observe pronounced departures from RMT not only among the largest singular values – the usual outliers – but also among the smallest ones.

A comparison of the associated singular vectors with the eigenvectors of the activation covariance matrices shows that there is considerable overlap wherever RMT is violated. Thus, significant directions in the data are captured by small singular values and their vectors as well as by the large ones. We confirm this empirically: zeroing out the singular values that deviate from RMT raises language-model perplexity far more than removing values from the bulk, and after fine-tuning the smallest decile can be the third most influential part of the spectrum. To explain how vectors linked to small singular values can carry more information than those linked to larger values, we propose a linear random-matrix model. Our findings highlight the overlooked importance of the low end of the spectrum and provide theoretical and practical guidance for SVD-based pruning and compression of large language models.

Authors: Max Staats, Matthias Thamm, Bernd Rosenow

Find the poster here.
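
The mechanics of comparing a spectrum with a random-matrix baseline and zeroing out deviating singular values, as described in the abstract above, can be sketched on a synthetic matrix. This is only an illustration under stated assumptions, not the authors' pipeline: it uses the Marchenko-Pastur bulk edges for an i.i.d. Gaussian matrix as the baseline, a planted rank-one "signal", and hypothetical helper names (`mp_singular_value_edges`, `zero_singular_values`). It does not involve a real transformer or a perplexity measurement.

```python
import numpy as np

def mp_singular_value_edges(n_rows, n_cols):
    """Marchenko-Pastur bulk edges for the singular values of an
    n_rows x n_cols matrix (n_rows <= n_cols) with i.i.d. entries of
    variance 1 / n_cols."""
    ratio = np.sqrt(n_rows / n_cols)
    return 1.0 - ratio, 1.0 + ratio

def zero_singular_values(weight, mask):
    """Rebuild `weight` with the singular values selected by `mask` set to 0."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    return (u * np.where(mask, 0.0, s)) @ vt

rng = np.random.default_rng(0)
n_rows, n_cols = 200, 800
noise = rng.normal(scale=1 / np.sqrt(n_cols), size=(n_rows, n_cols))

# Plant one "learned" direction on top of the random matrix (assumption).
u0 = rng.normal(size=n_rows)
u0 /= np.linalg.norm(u0)
v0 = rng.normal(size=n_cols)
v0 /= np.linalg.norm(v0)
weight = noise + 3.0 * np.outer(u0, v0)

s = np.linalg.svd(weight, compute_uv=False)      # sorted in descending order
lower, upper = mp_singular_value_edges(n_rows, n_cols)
outside_bulk = (s < lower) | (s > upper)         # deviations from the baseline
pruned = zero_singular_values(weight, outside_bulk)
```

In this toy setting the planted direction shows up as a singular value above the upper bulk edge, and `pruned` is the matrix with every flagged value removed. In the study itself, the effect of such removals is assessed through language-model perplexity, which this sketch does not attempt to reproduce.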

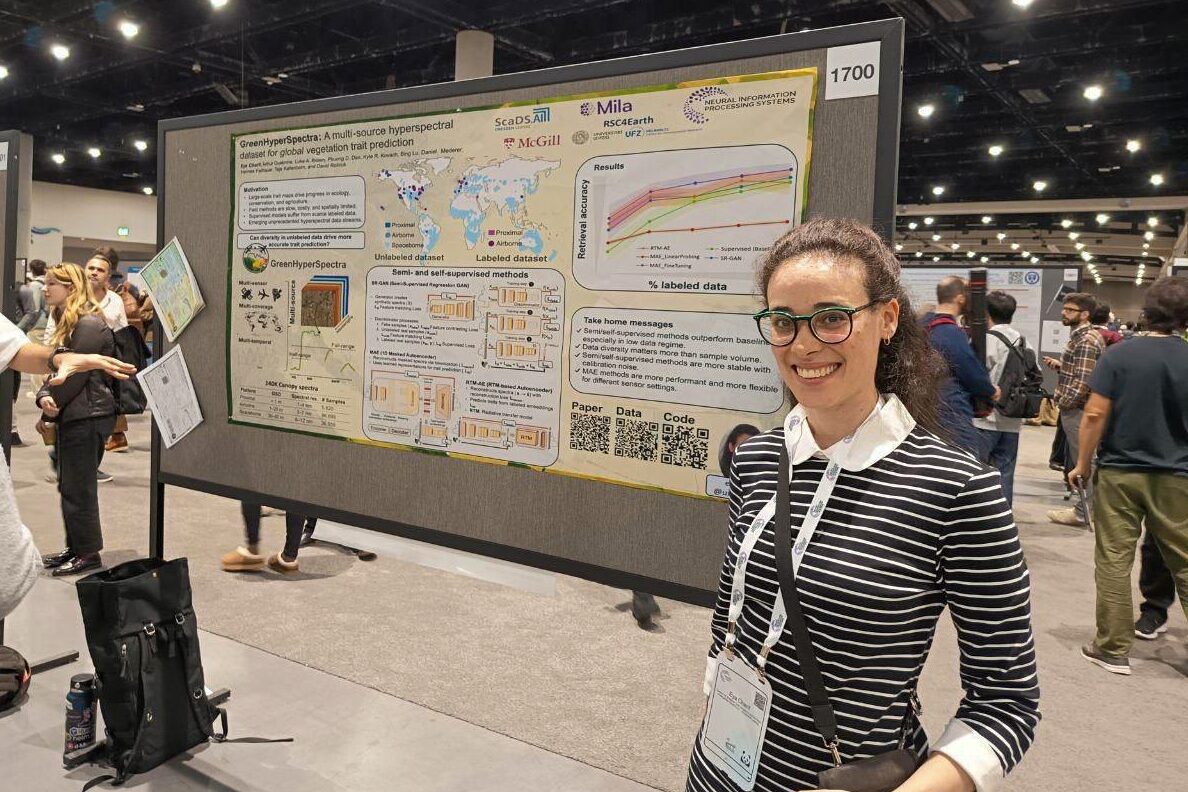

GreenHyperSpectra: A multi-source hyperspectral dataset for global vegetation trait prediction

Abstract: Plant traits such as leaf carbon content and leaf mass are essential variables in the study of biodiversity and climate change. However, conventional field sampling cannot feasibly cover trait variation at ecologically meaningful spatial scales. Machine learning represents a valuable solution for plant trait prediction across ecosystems, leveraging hyperspectral data from remote sensing. Nevertheless, trait prediction from hyperspectral data is challenged by label scarcity and substantial domain shifts (e.g., across sensors, ecological distributions), requiring robust cross-domain methods.

Here, we present GreenHyperSpectra, a pretraining dataset encompassing real-world cross-sensor and cross-ecosystem samples designed to benchmark trait prediction with semi- and self-supervised methods. We adopt an evaluation framework encompassing in-distribution and out-of-distribution scenarios. We successfully leverage GreenHyperSpectra to pretrain label-efficient multi-output regression models that outperform the state-of-the-art supervised baseline. Our empirical analyses demonstrate substantial improvements in learning spectral representations for trait prediction, establishing a comprehensive methodological framework to catalyze research at the intersection of representation learning and plant functional traits assessment.

Authors: Eya Cherif, Arthur Ouaknine, Luke A. Brown, Phuong D. Dao, Kyle R Kovach, Bing Lu, Daniel Mederer, Hannes Feilhauer, Teja Kattenborn, David Rolnick

This poster was presented at the NeurIPS 2025 Datasets and Benchmarks Track poster session. Find the poster here.