August 7, 2024

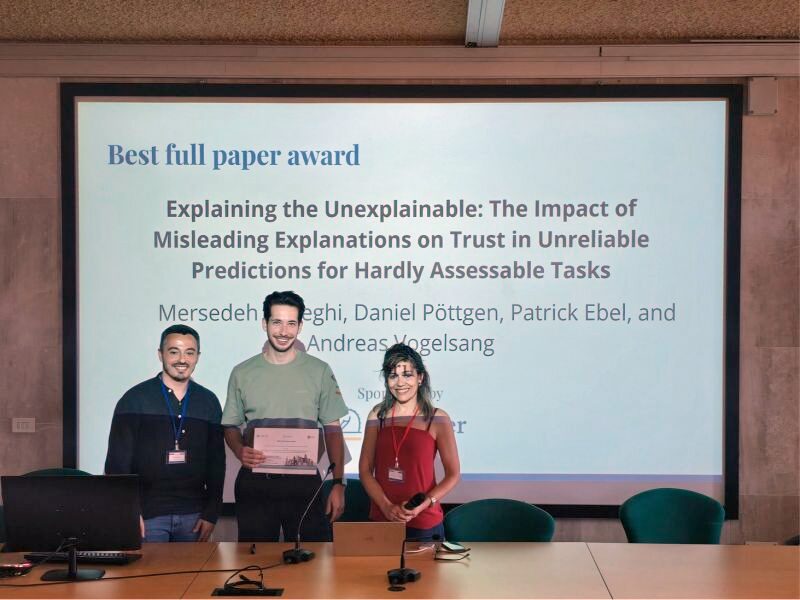

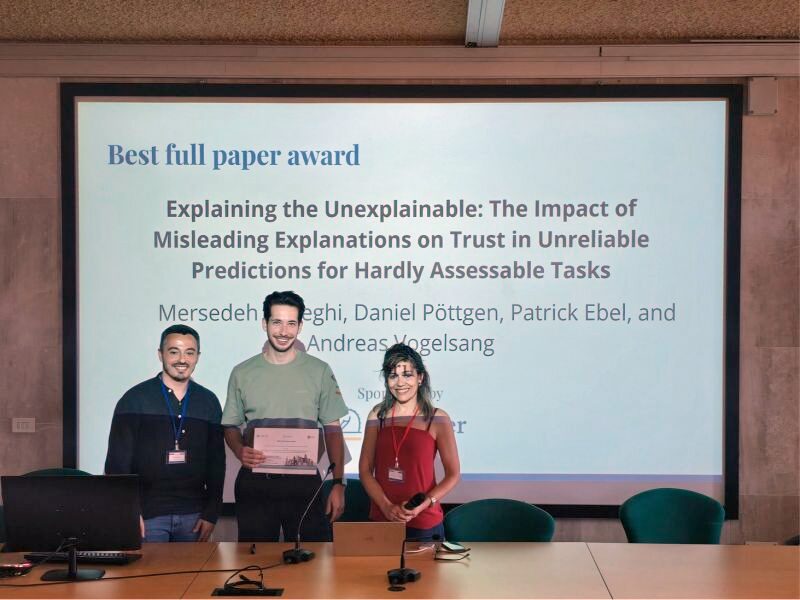

Patrick Ebel wins Best Paper Award at UMAP’24

We are happy to announce that Patrick Ebel, leader of the ScaDS.AI Dresden/Leipzig junior research group “Computational Interaction and Mobility” (CIAO), and his colleagues Mersede Sadeghi, Daniel Pöttgen, and Andreas Vogelsang won the Best Paper Award at UMAP’24.

The paper, titled “Explaining the Unexplainable: The Impact of Misleading Explanations on Trust in Unreliable Predictions for Hardly Assessable Tasks”, examines how explanations affect people’s trust in computer systems, especially when those systems frequently make mistakes. A survey of 162 participants found that people trust a system more when it provides an explanation for its decisions, even when that explanation is misleading. As a result, participants were more likely to accept the system’s decisions. The study highlights the importance of carefully crafting explanations so that they do not mislead users. The goal is to build user trust by providing honest, practical and helpful explanations.

Junior Research Group “Computational Interaction and Mobility” (CIAO)

Patrick Ebel’s research group aims to create computational models of how people interact with technology and make decisions. Its core topics include the analysis of large naturalistic driving datasets, distraction research, and computational models of human-computer interaction. Another focus is the data-driven evaluation of user interfaces. Explainability plays a central role throughout the group’s work.

“We want to develop methods and models that help us to better understand human interaction with intelligent technology and to design systems that work optimally with people. To do this, we need to understand why machines behave the way they do. We hope that this award will serve as a basis for future research and collaboration in this field.” – Patrick Ebel

ACM UMAP Conference

ACM UMAP is the leading international conference for researchers and practitioners in user modeling and personalization. This year’s conference took place from July 1–4 in Cagliari, Sardinia, Italy, and focused on user modeling, personalization, and the adaptation of intelligent systems. Topics included the impact of large language models and generative AI on user behavior and trust. The conference also explored the need for new models to create sustainable and inclusive services that address critical global challenges.