July 18, 2025

ScaDS.AI Dresden/Leipzig at SIGIR and ICTIR 2025

From July 13 to 17, 2025, the 48th International ACM SIGIR Conference on Research and Development in Information Retrieval took place in Padua, Italy. It was followed on July 18 by ICTIR 2025 (the 15th International Conference on Innovative Concepts and Theories in Information Retrieval) at the same venue. Researchers from ScaDS.AI Dresden/Leipzig attended both conferences and presented their research in papers, a workshop, a keynote, and posters.

About SIGIR and ICTIR 2025

True to its name, SIGIR focuses on research and development in information retrieval. It is the leading international forum for presenting new research results and demonstrating new systems and techniques in this field. Over the five conference days, researchers presented a wide range of papers and system demonstrations and could also take part in the doctoral consortium, tutorials, and workshops.

ICTIR, a one-day conference on innovative concepts and theories, complements the SIGIR program without overlapping with it. This year, ICTIR focused primarily on the theme “LLMs + IR, what could possibly go wrong?” It welcomed research in this area as well as interdisciplinary work that combines information retrieval with other theoretically motivated disciplines.

Contributions from ScaDS.AI Dresden/Leipzig to SIGIR 2025

ScaDS.AI Dresden/Leipzig was represented at SIGIR with four papers and a workshop. Particular mention should be made of the paper “The Viability of Crowdsourcing for RAG Evaluation”, which received the Best Paper Honourable Mention Award at SIGIR 2025.

Congratulations to Lukas Gienapp (University of Kassel, hessian.AI, ScaDS.AI Dresden/Leipzig), Tim Hagen (University of Kassel, hessian.AI), Maik Fröbe (Friedrich-Schiller-Universität Jena), Matthias Hagen (Friedrich-Schiller-Universität Jena), Benno Stein (Bauhaus-Universität Weimar), Martin Potthast (University of Kassel, hessian.AI, and ScaDS.AI Dresden/Leipzig), and Harrisen Scells (University of Tübingen).

ScaDS.AI Dresden/Leipzig contributed the following work to SIGIR 2025:

In 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025), July 2025. ACM.

Authors: Sebastian Bruch, Maik Fröbe, Tim Hagen, Franco Maria Nardini, and Martin Potthast

Type: Workshop

Abstract: Measuring effectiveness and efficiency in information retrieval has a strong empirical background. While modern retrieval systems substantially improve effectiveness, the community has not yet agreed on how to measure efficiency, making it difficult to contrast effectiveness and efficiency fairly. Efficiency-oriented system comparisons are difficult due to factors such as hardware configurations, software versioning, and experimental settings. Efficiency affects users, researchers, and the environment and can be measured in many dimensions beyond time and space, such as resource consumption, water usage, and sample efficiency. Analyzing the efficiency of algorithms and their trade-off with effectiveness requires revisiting and establishing new standards and principles, from defining relevant concepts to designing new measures and guidelines to assess the findings’ significance. ReNeuIR’s fourth iteration aims to bring the community together to debate these questions and collaboratively test and improve benchmarking frameworks for efficiency based on discussions and collaborations of its previous iterations, including a shared task focused on efficiency and reproducibility.

In 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025), July 2025. ACM.

Authors: Maik Fröbe, Andrew Parry, Ferdinand Schlatt, Sean MacAvaney, Benno Stein, Martin Potthast, and Matthias Hagen

Type: Short Paper

Abstract: Relevance judgments can differ between assessors, but previous work has shown that such disagreements have little impact on the effectiveness rankings of retrieval systems. This applies to disagreements between humans as well as between human and large language model (LLM) assessors. However, the agreement between different LLM assessors has not yet been systematically investigated. To close this gap, we compare eight LLM assessors on the TREC DL tracks and the retrieval task of the RAG track with each other and with human assessors. We find that the agreement between LLM assessors is higher than between LLMs and humans and, importantly, that LLM assessors favor retrieval systems that use LLMs in their ranking decisions: our analyses with 30–50 retrieval systems show that the system rankings obtained by LLM assessors overestimate LLM-based re-rankers by 9 to 17 positions on average.
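The headline finding, LLM-based re-rankers being overestimated by 9 to 17 positions, compares a system's position in two rankings: one derived from human judgments and one from LLM judgments. The following Python sketch illustrates that kind of comparison under stated assumptions: the system names and both rankings are invented for illustration, and the paper's exact computation may differ.

```python
def rank_positions(ranking):
    """Map each system name to its 1-based position (1 = best)."""
    return {system: position for position, system in enumerate(ranking, start=1)}


def mean_overestimate(human_ranking, llm_ranking, systems):
    """Average number of positions the given systems move up when
    ranked by LLM judgments instead of human judgments."""
    human_pos = rank_positions(human_ranking)
    llm_pos = rank_positions(llm_ranking)
    # Positive shift: the system is ranked better (smaller position) by the LLM assessor.
    shifts = [human_pos[s] - llm_pos[s] for s in systems]
    return sum(shifts) / len(shifts)


# Hypothetical system rankings, best system first.
human = ["lexical-1", "dense-1", "lexical-2", "dense-2", "llm-rerank-1", "llm-rerank-2"]
llm = ["llm-rerank-1", "llm-rerank-2", "lexical-1", "dense-1", "lexical-2", "dense-2"]

print(mean_overestimate(human, llm, ["llm-rerank-1", "llm-rerank-2"]))  # 4.0
```

A negative value would mean the LLM assessor ranks those systems lower than humans do.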

In 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025), July 2025. ACM.

Authors: Lukas Gienapp, Tim Hagen, Maik Fröbe, Matthias Hagen, Benno Stein, Martin Potthast, and Harrisen Scells

Type: Full Paper

Abstract: How good are humans at writing and judging responses in retrieval-augmented generation (RAG) scenarios? To answer this question, we investigate the efficacy of crowdsourcing for RAG through two complementary studies: response writing and response utility judgment. Our new Webis Crowd RAG Corpus 2025 (Webis-CrowdRAG-25) consists of 903 human-written and 903 LLM-generated responses for the 301 topics of the TREC 2024 RAG track, with each response composed according to one of the three discourse styles ‘bullet list’, ‘essay’, or ‘news’. For a selection of 65 topics, the corpus further contains 47,320 pairwise human judgments and 10,556 pairwise LLM judgments across seven utility dimensions (e.g., coverage and coherence). Our analyses give insights into human writing behavior for RAG and the viability of crowdsourcing for RAG evaluation. We find that human pairwise judgments provide reliable and cost-effective results. This is much less the case for LLM-based pairwise judgments, human/LLM-based pointwise judgments, and automated comparisons with human-written reference responses.
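Pairwise judgments like those in Webis-CrowdRAG-25 only state which of two responses is better on a given utility dimension; comparing many responses requires aggregating them. One simple option, shown here as a hypothetical Python sketch, is a win rate with ties counted as half a win. This is an illustrative choice, not necessarily the aggregation used in the paper (Bradley–Terry models are a common alternative), and the response IDs are invented.

```python
from collections import defaultdict


def win_rates(judgments):
    """Aggregate pairwise judgments into per-response win rates.

    judgments: iterable of (response_a, response_b, winner) triples, where
    winner is response_a, response_b, or None for a tie.
    """
    wins = defaultdict(float)
    comparisons = defaultdict(int)
    for a, b, winner in judgments:
        if winner not in (a, b, None):
            raise ValueError(f"winner {winner!r} is not part of the pair")
        comparisons[a] += 1
        comparisons[b] += 1
        if winner is None:
            # A tie counts as half a win for each response.
            wins[a] += 0.5
            wins[b] += 0.5
        else:
            wins[winner] += 1.0
    return {r: wins[r] / comparisons[r] for r in comparisons}


# Hypothetical judgments for one utility dimension (e.g., coherence).
judgments = [
    ("human-1", "llm-1", "human-1"),
    ("human-1", "llm-2", "llm-2"),
    ("llm-1", "llm-2", None),
]
rates = win_rates(judgments)
print(sorted(rates, key=rates.get, reverse=True))  # ['llm-2', 'human-1', 'llm-1']
```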

In 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025), July 2025. ACM.

Authors: Tim Hagen, Maik Fröbe, Jan Heinrich Merker, Harrisen Scells, Matthias Hagen, and Martin Potthast

Type: Resource Paper

Abstract: The reproducibility and transparency of retrieval experiments depend on the availability of information about the experimental setup. However, manually collecting experiment metadata can be tedious, error-prone, and inconsistent, which calls for automated, systematic collection. Expanding ir_metadata, we present the TIREx tracker, a tool that records hardware configurations, power/CPU/RAM/GPU usage, and experiment/system versions. Implemented as a lightweight, platform-independent C binary, the TIREx tracker integrates seamlessly into Python, Java, or C/C++ workflows and can easily be integrated into shared task submissions, as we demonstrate for the TIRA/TIREx platform.
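The TIREx tracker itself is a C binary, and its interface is not described here. To make the idea of automatically recording experiment metadata concrete, the following Python sketch captures a small subset (wall-clock time, peak Python heap allocation, Python version, machine architecture) using only standard-library modules. The `measure` helper and its field names are hypothetical and do not reflect the tracker's API or output format.

```python
import platform
import time
import tracemalloc


def measure(fn, *args, **kwargs):
    """Run fn once and return (result, metadata).

    Hypothetical stand-in for experiment tracking; not the TIREx tracker API.
    Only Python-level heap allocations are traced, not total process RAM.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn(*args, **kwargs)
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    metadata = {
        "wall_time_s": elapsed,
        "peak_python_heap_bytes": peak,
        "python_version": platform.python_version(),
        "machine": platform.machine(),
    }
    return result, metadata


result, meta = measure(sorted, [3, 1, 2])
print(result)  # [1, 2, 3]
print(sorted(meta))
```

A real tracker additionally needs hardware-level counters (power, GPU usage) that are out of reach for pure Python, which is one reason to implement it as a native binary.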

In 48th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2025), July 2025. ACM.

Authors: Ferdinand Schlatt, Tim Hagen, Martin Potthast, and Matthias Hagen

Type: Full Paper

Abstract: Transformer-based retrieval approaches typically use the contextualized embedding of the first input token as a dense vector representation for queries and documents. The embeddings of all other tokens are also computed but then discarded, wasting resources. In this paper, we propose the Token-Independent Text Encoder (TITE) as a more efficient modification of the backbone encoder model. Using an attention-based pooling technique, TITE iteratively reduces the sequence length of hidden states layer by layer so that the final output is already a single sequence representation vector. Our empirical analyses on the TREC Deep Learning 2019 and 2020 tracks and the BEIR benchmark show that TITE is on par in effectiveness with standard bi-encoder retrieval models while being up to 2.8 times faster at encoding queries and documents.
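The abstract does not spell out TITE's pooling operator, so the following pure-Python sketch only illustrates the general idea of attention-based pooling that shrinks a sequence step by step until a single vector remains. All details are assumptions: non-overlapping windows of two hidden states, the window mean used as the attention query, and no learned parameters. In an actual encoder, each reduction would happen inside a transformer layer with trained weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(window):
    """Pool a window of vectors into one vector.

    Assumption: the window mean serves as the attention query; tokens are
    weighted by softmax-normalized scaled dot products with it.
    """
    dim = len(window[0])
    query = [sum(vec[i] for vec in window) / len(window) for i in range(dim)]
    scores = [sum(q * x for q, x in zip(query, vec)) / math.sqrt(dim) for vec in window]
    weights = softmax(scores)
    return [sum(w * vec[i] for w, vec in zip(weights, window)) for i in range(dim)]


def reduce_to_single_vector(hidden_states, window_size=2):
    """Repeatedly pool non-overlapping windows until one vector remains.

    Each pass stands in for one encoder layer that shortens the sequence.
    """
    if window_size < 2:
        raise ValueError("window_size must be at least 2 to shrink the sequence")
    states = list(hidden_states)
    if not states:
        raise ValueError("hidden_states must not be empty")
    while len(states) > 1:
        states = [
            attention_pool(states[i:i + window_size])
            for i in range(0, len(states), window_size)
        ]
    return states[0]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5], [2.0, 0.0]]
print(reduce_to_single_vector(tokens))
```

With five input vectors and windows of two, the sequence shrinks from 5 to 3 to 2 to 1, so no separate first-token embedding has to be selected and the others discarded.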

Contributions from ScaDS.AI Dresden/Leipzig to ICTIR 2025

Prof. Dr. Martin Potthast (PI at ScaDS.AI Dresden/Leipzig) presented two papers with different research groups at ICTIR 2025. One of them, “Axioms for Retrieval-Augmented Generation”, received a Best Paper Honourable Mention Award. Congratulations to Jan Heinrich Merker (Friedrich-Schiller-Universität Jena), Maik Fröbe, Benno Stein, Martin Potthast, and Matthias Hagen.

Furthermore, Prof. Potthast gave one of the two keynotes at ICTIR, addressing the following questions: “Who writes the web? Who reads it? Who judges it? And who reports back to us? About authenticity in information retrieval.”

The papers that were presented at ICTIR:

In 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025), July 2025.

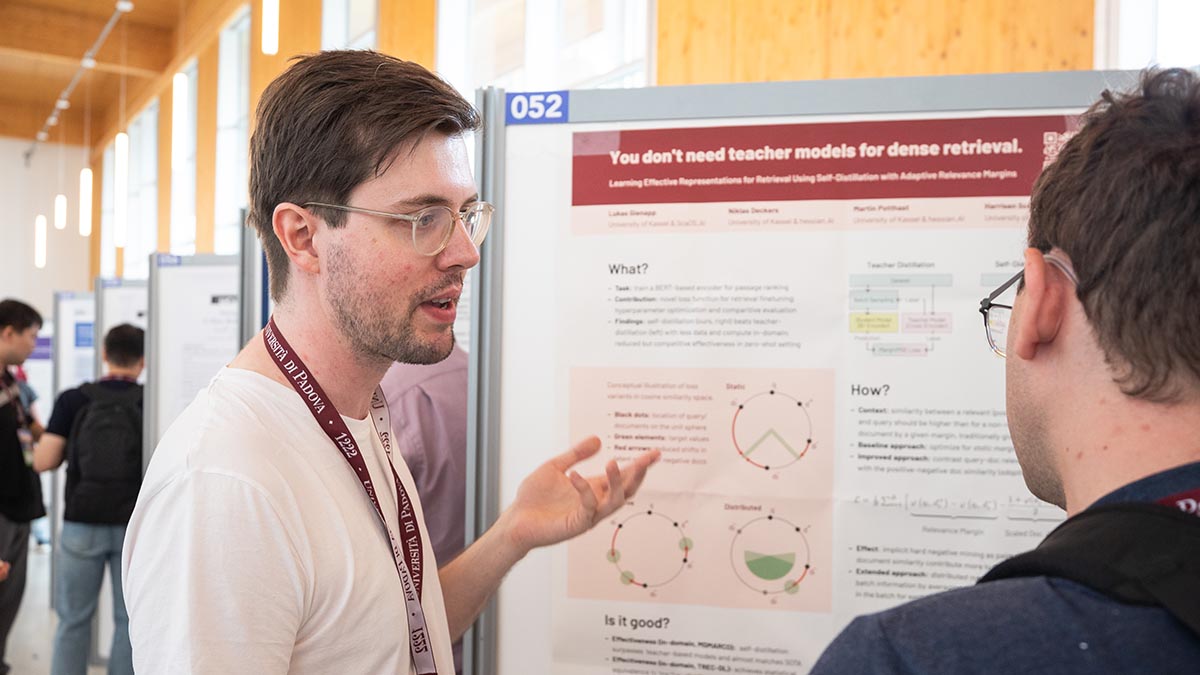

Authors: Lukas Gienapp, Niklas Deckers, Martin Potthast, and Harrisen Scells

Type: Full Paper

Abstract: Representation-based retrieval models, so-called biencoders, estimate the relevance of a document to a query by calculating the similarity of their respective embeddings. Current state-of-the-art biencoders are trained using an expensive training regime involving knowledge distillation from a teacher model and batch sampling. Instead of relying on a teacher model, we contribute a novel parameter-free loss function for self-supervision that exploits the pre-trained language modeling capabilities of the encoder model as a training signal, eliminating the need for batch sampling by performing implicit hard negative mining. We investigate the capabilities of our proposed approach through extensive ablation studies, demonstrating that self-distillation can match the effectiveness of teacher distillation using only 13.5% of the data, while speeding up training by a factor of 3 to 15 compared to parametrized losses. Code and data are openly available.

In 15th International Conference on Innovative Concepts and Theories in Information Retrieval (ICTIR 2025), July 2025.

Authors: Jan Heinrich Merker, Maik Fröbe, Benno Stein, Martin Potthast, and Matthias Hagen

Type: Full Paper

Abstract: Information retrieval axioms are formalized constraints that good retrieval models should fulfill, e.g., ranking documents that contain the query terms more often higher. Over the last decades, more than 25 such axioms have been formalized and used to analyze, improve, or explain retrieval models. However, those axioms were designed for retrieval scenarios with document rankings as output and thus do not directly fit the new scenario of retrieval-augmented generation (RAG) systems. To close this gap, we rethink retrieval axioms for the RAG scenario. First, we try to transfer the underlying ideas of as many traditional axioms as possible to the new RAG setting (18 axioms can be transferred), and second, we suggest and formalize 11 new axioms specifically tailored towards generated answer utility. In experiments on the TREC 2024 RAG track data and on the CrowdRAG-25 corpus, we show that the new axioms capture automated and human RAG preferences more accurately than the transferred traditional axioms. Furthermore, we illustrate practical applications of axioms for RAG by inspecting preferences of language models and by aiding human preference judgments. All our code and data are publicly available.
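The example constraint in the abstract corresponds to a classic term-frequency axiom, commonly called TFC1: among documents of (roughly) equal length, the one containing the query terms more often should be ranked higher. The Python sketch below checks this traditional axiom for a document pair; it is not one of the new RAG axioms from the paper. Whitespace tokenization, summing counts over query terms, and the 10% length tolerance are illustrative assumptions.

```python
from collections import Counter


def tfc1_preference(query, doc_a, doc_b, length_tolerance=0.1):
    """TFC1-style check: +1 if doc_a should rank higher, -1 if doc_b, 0 if the
    axiom does not apply or expresses no preference."""
    query_terms = set(query.lower().split())
    tokens_a = doc_a.lower().split()
    tokens_b = doc_b.lower().split()
    # The axiom only compares documents of (roughly) equal length.
    longer = max(len(tokens_a), len(tokens_b))
    if longer == 0 or abs(len(tokens_a) - len(tokens_b)) / longer > length_tolerance:
        return 0
    counts_a, counts_b = Counter(tokens_a), Counter(tokens_b)
    tf_a = sum(counts_a[t] for t in query_terms)
    tf_b = sum(counts_b[t] for t in query_terms)
    if tf_a == tf_b:
        return 0
    return 1 if tf_a > tf_b else -1


query = "neural retrieval"
doc_a = "neural retrieval models often use neural networks"
doc_b = "classic retrieval models often use term statistics"
print(tfc1_preference(query, doc_a, doc_b))  # 1
```

Pairwise preferences like this can be compared against a model's actual ranking to see where it violates the axiom; the paper's RAG axioms apply the same pairwise idea to generated answers instead of documents.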