Learning Support for Ontology-Mediated Querying

Research Area: Knowledge Representation and Learning

Ontology-mediated querying is a popular paradigm for injecting ontological knowledge into data-based applications, providing a path towards more intelligent querying and more complete answers. In the project Learning Support for Ontology-Mediated Querying, we combine ontology-mediated querying with machine learning approaches in two different ways. On the one hand, we exploit techniques from computational learning theory to support users of ontology-mediated querying, for example by helping them construct the desired queries and ontologies, either from labeled data examples or by ‘interviewing’ the user about the properties of the object that they aim to construct. On the other hand, we extend the expressive power of ontology-mediated queries with features that are relevant to machine learning and data analytics applications, such as counting and aggregation.

Aims

The project Learning Support for Ontology-Mediated Querying aims to study two kinds of learning scenarios. In the first one, called learning from examples, a set of positively and negatively labeled data examples is given, that is, answers and non-answers to the query to be formulated. One then seeks a fitting query, meaning a query that classifies all given examples correctly. The second scenario is Angluin’s framework of exact learning, where a learning algorithm actively presents examples to the user and asks for their status (positive or negative).
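
To make the notion of a fitting query concrete, here is a minimal Python sketch. It rests on simplifying assumptions that are not part of the project description: there is no ontology, queries are unary conjunctive queries, and the answer check is brute force over all variable assignments. The representation (facts as tuples, the names `answers` and `fits`, and the sample data) is purely illustrative.

```python
from itertools import product

# Illustrative encoding (an assumption of this sketch):
# a database is a set of facts such as ("hasChild", "ann", "bob");
# a query is a pair (answer_variable, atoms), with atoms shaped like facts
# but using variable names in place of constants.

def answers(query, database, candidate):
    """Return True if `candidate` is an answer to the unary query on `database`."""
    answer_var, atoms = query
    variables = sorted({v for atom in atoms for v in atom[1:]})
    constants = sorted({c for fact in database for c in fact[1:]} | {candidate})
    others = [v for v in variables if v != answer_var]
    # Brute force: try every assignment of constants to the remaining variables.
    for values in product(constants, repeat=len(others)):
        assignment = dict(zip(others, values))
        assignment[answer_var] = candidate
        if all((atom[0], *(assignment[v] for v in atom[1:])) in database
               for atom in atoms):
            return True
    return False

def fits(query, positives, negatives):
    """A query fits if it returns every positive example and no negative one."""
    return (all(answers(query, db, a) for db, a in positives)
            and not any(answers(query, db, a) for db, a in negatives))

db = {("hasChild", "ann", "bob"), ("Doctor", "bob"), ("hasChild", "carl", "dana")}
positives = [(db, "ann")]    # ann should be an answer
negatives = [(db, "carl")]   # carl should not be an answer

has_doctor_child = ("x", [("hasChild", "x", "y"), ("Doctor", "y")])
has_child = ("x", [("hasChild", "x", "y")])

print(fits(has_doctor_child, positives, negatives))
print(fits(has_child, positives, negatives))
```

The first query separates the two examples and therefore fits, while the second is too general because it also returns the negative example. The brute-force check is exponential in the number of query variables, so it only illustrates the definition and says nothing about the complexity questions studied in the project.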

Problem

Important problems studied in this project include:

- When is learning possible in polynomial time, with polynomially many examples (in learning from examples), or with polynomially many queries to the user (in Angluin’s framework)?

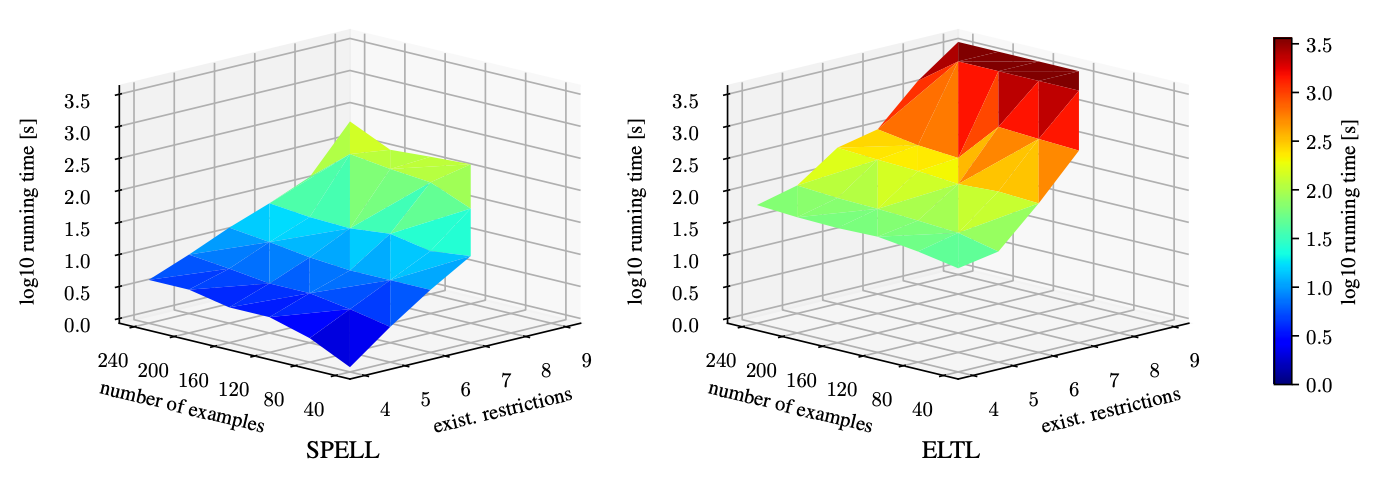

- If polynomial time cannot be attained, can we leverage the efficiency of SAT solvers to solve the fitting problem when learning from examples?

- How can we guarantee that the constructed queries generalize to unseen data examples in the sense of PAC learning?

- In the (usual) case that there are multiple queries that fit the examples, which one should be constructed? Do logically most general or most specific fitting queries always exist?
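
As background for the generalization question, the following sketch computes the textbook sample bound for a learner that outputs a hypothesis consistent with all examples from a finite hypothesis class: with at least (ln|H| + ln(1/δ)) / ε examples, the returned hypothesis has error at most ε with probability at least 1 − δ. This is a standard result from computational learning theory and not a result of the project; the function name and the choice of numbers are illustrative assumptions.

```python
import math

def consistent_learner_sample_bound(num_hypotheses, epsilon, delta):
    """Number of examples sufficient for a consistent learner over a finite
    hypothesis class to be probably (1 - delta) approximately (epsilon) correct."""
    return math.ceil((math.log(num_hypotheses) + math.log(1 / delta)) / epsilon)

# Hypothetical setting: 1000 candidate queries, 10% error, 95% confidence.
print(consistent_learner_sample_bound(1000, 0.1, 0.05))  # prints 100
```

Because the bound grows only logarithmically in the number of hypotheses, restricting attention to small fitting queries is one natural way to keep the required number of examples low.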

Technology

The project uses a rich mix of techniques that originally stem from the following areas: computational learning theory, database theory, graph theory, knowledge representation, and constraint satisfaction.

Outlook

We expect the project to significantly increase the usability of ontologies in data-centric applications. In addition, we believe that the mix of symbolic KR methods and learning techniques at the heart of the project is very attractive, and we hope that it will open up further research avenues in the future.

Publications

- Balder ten Cate, Maurice Funk, Jean Christoph Jung, Carsten Lutz: On the non-efficient PAC learnability of conjunctive queries. Inf. Process. Lett. 183: 106431 (2024)

- Balder ten Cate, Maurice Funk, Jean Christoph Jung, Carsten Lutz: SAT-Based PAC Learning of Description Logic Concepts. IJCAI 2023: 3347-3355

- Balder ten Cate, Victor Dalmau, Maurice Funk, Carsten Lutz: Extremal Fitting Problems for Conjunctive Queries. PODS 2023: 89-98

- Maurice Funk, Jean Christoph Jung, Carsten Lutz: Frontiers and Exact Learning of ELI Queries under DL-Lite Ontologies. IJCAI 2022: 2627-2633

- Maurice Funk, Jean Christoph Jung, Carsten Lutz: Actively Learning Concepts and Conjunctive Queries under ELr-Ontologies. IJCAI 2021: 1887-1893

Team

Lead

Prof. Dr. Carsten Lutz

Team Members

- Quentin Maniere

- Maurice Funk

Partners

- Jean Christoph Jung, TU Dortmund

- Balder ten Cate, University of Amsterdam