July 15, 2024

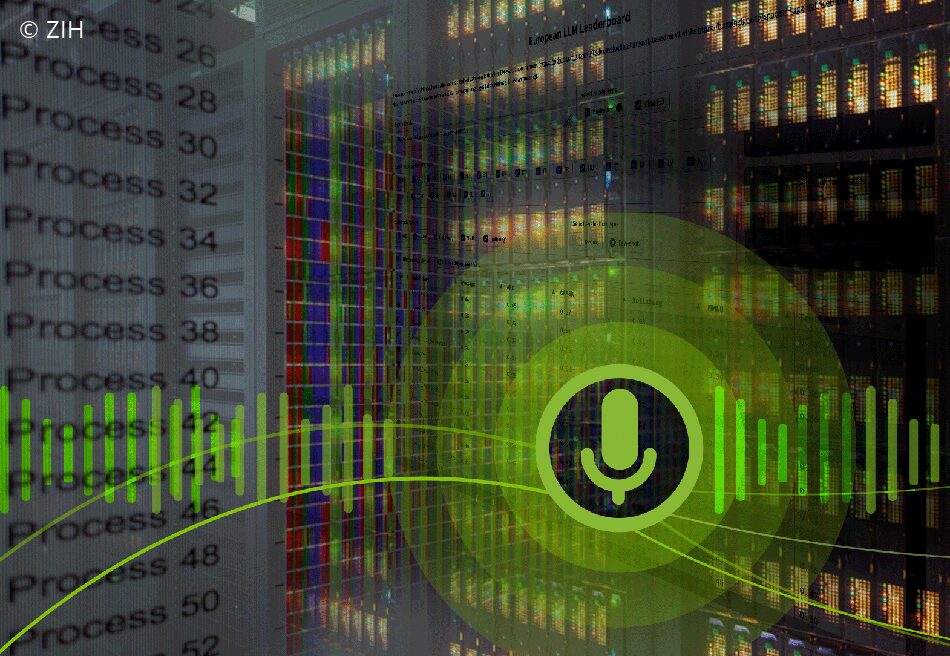

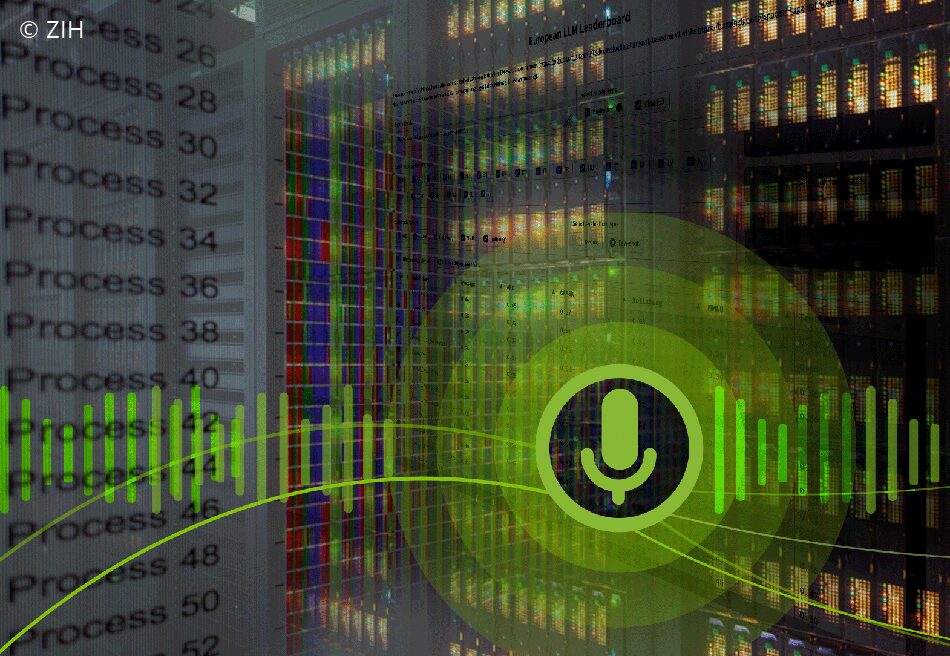

OpenGPT-X Team publishes European LLM Leaderboard

Multilingual Language Models Promote More Versatile Approaches in Language Technology

The digital processing of natural language has advanced considerably in recent years thanks to the spread of open-source large language models (LLMs). Given the significant social impact of this development, there is an urgent need to improve support for multilinguality. Scientists at TU Dresden, along with ten partners from business, science, and media, are supporting this development through OpenGPT-X, a project funded by the German Federal Ministry for Economic Affairs and Climate Action (BMWK) that has been running since 2022. The project team has now published a multilingual leaderboard that compares several publicly available state-of-the-art language models, each comprising around 7 billion parameters.

Advancing LLM Research with Powerful Multilingual Benchmarks

Most benchmarks for evaluating language models are available mainly in English. The OpenGPT-X consortium set out to broaden language coverage significantly, paving the way for more versatile and effective language technology. To reduce language barriers in the digital domain, the scientists carried out extensive multilingual training runs and tested the resulting AI models on tasks such as logical reasoning, commonsense understanding, multi-task learning, truthfulness and translation.

When developing LLMs, it is important that training and evaluation go hand in hand. To enable comparability across multiple languages, common benchmarks such as ARC, HellaSwag, TruthfulQA, GSM8K and MMLU were machine-translated into 21 of the 24 supported European languages using DeepL. In addition, two further multilingual benchmarks that were already available for the languages considered in the project were added to the leaderboard.

The team plans to use the leaderboard to automate the evaluation of models from the AI platform Hugging Face Hub, ensuring that results are traceable and comparable. TU Dresden will provide the infrastructure needed to run the evaluation jobs on the HPC cluster. Following the current release of the European LLM Leaderboard, the OpenGPT-X models will be published this summer and will also appear there. This aligns with a core goal of OpenGPT-X: making the benefits of these AI language models accessible to a wider audience in Europe and beyond while supporting a large number of European languages. This progress is particularly important for languages that are traditionally underrepresented in natural language processing.

Combined Big Data, AI and HPC Expertise at TUD Dresden University of Technology

With the two competence centers ScaDS.AI Dresden/Leipzig (Center for Scalable Data Analytics and Artificial Intelligence) and ZIH (Center for Information Services and High Performance Computing) at TUD Dresden University of Technology, OpenGPT-X has a cooperation partner that combines expertise in training and evaluating large language models on supercomputing clusters. The joint efforts will focus on several critical tasks, including developing scalable evaluation pipelines, integrating various benchmarks, and performing comprehensive evaluations on supercomputing clusters. Additionally, the team will work on improving model performance, scalability and efficiency, continuously monitoring the impact of pre-training and fine-tuning, and leveraging innovative high-performance computing resources.

Overview of the Benchmarks Translated and Employed in the Project

- ARC and GSM8K focus on general education and mathematics: ARC poses grade-school science questions, and GSM8K poses grade-school math word problems.

- HellaSwag and TruthfulQA test the ability of models to provide plausible continuations and truthful answers.

- MMLU covers a wide range of tasks to assess how well models perform across many different domains.

- While FLORES-200 is aimed at assessing machine translation skills, Belebele focuses on understanding and answering questions in multiple languages.
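To illustrate how per-language results on benchmarks like these could be combined into a single leaderboard ranking, here is a minimal Python sketch. The model names, the scores, and the choice of a plain unweighted mean are assumptions made for illustration only; they are not the OpenGPT-X team's actual aggregation method or results.

```python
from statistics import mean

# Hypothetical accuracy scores: model -> benchmark -> language -> score in [0, 1].
# All names and numbers are placeholders, NOT real leaderboard results.
scores = {
    "model-a": {
        "ARC": {"de": 0.50, "fr": 0.40},
        "MMLU": {"de": 0.60, "fr": 0.50},
    },
    "model-b": {
        "ARC": {"de": 0.30, "fr": 0.50},
        "MMLU": {"de": 0.40, "fr": 0.40},
    },
}


def benchmark_average(per_language):
    """Average one benchmark's scores over all languages (assumed: unweighted)."""
    return mean(per_language.values())


def model_average(per_benchmark):
    """Average the per-benchmark means so every benchmark counts equally."""
    return mean(benchmark_average(langs) for langs in per_benchmark.values())


def rank_models(all_scores):
    """Return (model, average) pairs sorted from best to worst average."""
    averages = {model: model_average(b) for model, b in all_scores.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for model, avg in rank_models(scores):
        print(f"{model}: {avg:.3f}")
```

Averaging first over languages and then over benchmarks keeps a benchmark with many translated languages from dominating the overall score; a real leaderboard might weight tasks or languages differently.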

Funding and Project Management

The OpenGPT-X project has been funded by the BMWK since 2022 and is largely coordinated by the Fraunhofer Institute for Intelligent Analysis and Information Systems (IAIS).

Contact

IAIS – Dr. Nico Flores-Herr, Dr. Michael Fromm

TUD Dresden University of Technology, ScaDS.AI Dresden/Leipzig: Dr. René Jäkel, Klaudia-Doris Thellmann

Publications

- Ali, Mehdi, Fromm, Michael, Thellmann, Klaudia, Rutmann, Richard, Lübbering, Max, Leveling, Johannes, Klug, Katrin, Ebert, Jan, Doll, Niclas, Buschhoff, Jasper, Jain, Charvi, Weber, Alexander, Jurkschat, Lena, Abdelwahab, Hammam, John, Chelsea, Ortiz Suarez, Pedro, Ostendorff, Malte, Weinbach, Samuel, Sifa, Rafet, Kesselheim, Stefan, & Flores-Herr, Nicolas. (2024). Tokenizer Choice For LLM Training: Negligible or Crucial? In K. Duh, H. Gomez, & S. Bethard (Eds.), Findings of the Association for Computational Linguistics: NAACL 2024 (pp. 3907-3924). Mexico City, Mexico: Association for Computational Linguistics. Retrieved from https://aclanthology.org/2024.findings-naacl.247

- Weber, Alexander Arno, Thellmann, Klaudia, Ebert, Jan, Flores-Herr, Nicolas, Lehmann, Jens, Fromm, Michael, & Ali, Mehdi. (2024). Investigating Multilingual Instruction-Tuning: Do Polyglot Models Demand for Multilingual Instructions? arXiv. Retrieved from https://arxiv.org/abs/2402.13703