Computational Interaction and Mobility (CIAO)

Project duration: 3+3 years (started in August 2023, mid-term evaluation in 2026)

Research Area: Engineering and Business

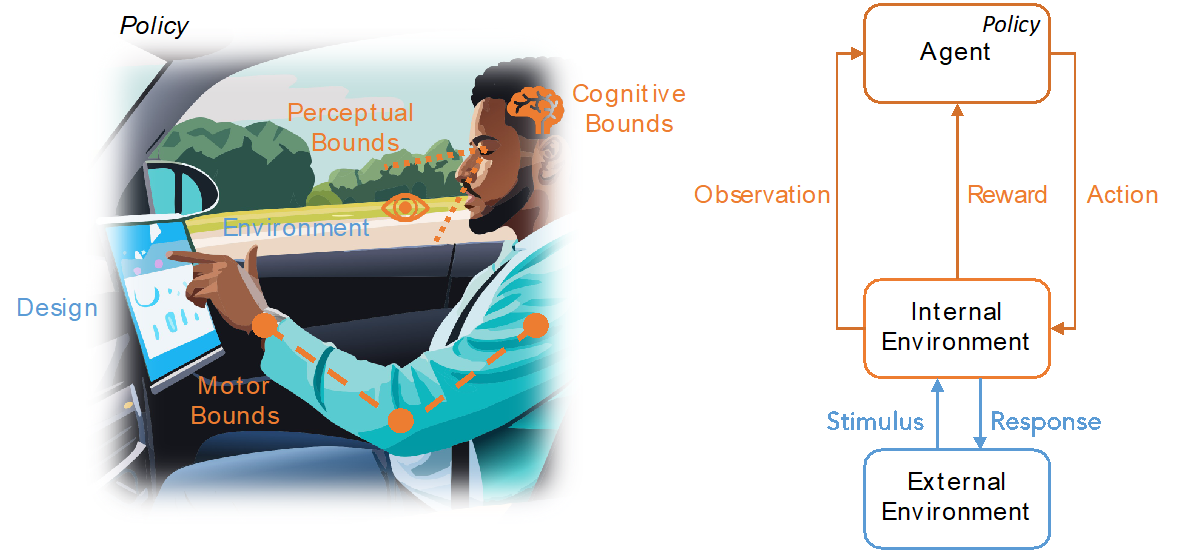

The Junior Research Group Computational Interaction and Mobility (CIAO) works at the intersection of machine learning, human-computer interaction, and mobility. We develop computational models that simulate human-like interaction behavior. These models can be used to evaluate user interfaces and predict how users interact with them, allowing designers and researchers to better understand not only the users but also their own designs. Our models are either data-driven (using usage data collected from customers) or based on computational rationality.

Problem

At CIAO, we strongly believe that the design, development, and evaluation of intelligent mobile devices (including cars) are not yet human-centered enough. This leads to decisions that are detached from customer needs and real-world problems. We want to rethink the current design process. Data-driven insights and machine learning-based user modeling need to be an integral part of the product development and evaluation process. Only then can one account for the variety of individual and contextual factors and build systems that are easy and safe to use for all users in all situations.

Aims

We aim to contribute to a better understanding of how humans interact with intelligent mobile interfaces and transportation systems by developing new data-driven user models and evaluation methods (1) based on supervised machine learning and (2) based on the theory of computational rationality and reinforcement learning.

Technology

The user models we build rely on supervised machine learning when they are data-driven and on reinforcement learning when they are computationally rational models of user behavior.

In addition to user modeling, we are developing a mixed reality driving simulator that builds on work by colleagues at TU Eindhoven. The simulator is built with the Unity game engine and will serve as a tool to evaluate our models and compare their predictions with empirical results from user studies.

Outlook

In the long run, we hope that our models will contribute to a better understanding of how humans interact with (intelligent) technology. The knowledge generated by our models can help designers and developers create systems that respect human limitations (cognitive, motor, perceptual), which is especially important given the paradigm shift towards human-AI collaboration.

Publications

Three example publications from before the group was founded:

- P. Ebel, C. Lingenfelder, and A. Vogelsang, “On the forces of driver distraction: Explainable predictions for the visual demand of in-vehicle touchscreen interactions,” Accident Analysis & Prevention, vol. 183, p. 106956, Apr. 2023

- P. Ebel, C. Lingenfelder, and A. Vogelsang, “Multitasking while driving: How drivers self-regulate their interaction with in-vehicle touchscreens in automated driving,” International Journal of Human–Computer Interaction, pp. 1–18, 2023

- P. Ebel, J. Orlovska, S. Hünemeyer, C. Wickman, A. Vogelsang, and R. Söderberg, “Automotive UX design and data-driven development: Narrowing the gap to support practitioners,” Transportation Research Interdisciplinary Perspectives, vol. 11, p. 100455, Sep. 2021

Team

Lead

- Dr. Patrick Ebel

Team Members

- Martin Lorenz

- Juliette-Michelle Burkhardt

- Timur Getselev