Fundamental Methods for AI

Machine Learning for Evolving Graph Data (Prof. Rahm)

Research on Machine Learning methods for graphs is mostly restricted to static graph data, although most graphs change considerably over time. Learning on such graphs therefore requires embeddings that encode the temporal information of a graph. However, Representation Learning for temporal graphs is largely unexplored. Our goals in this research area are:

- Research foundations for temporal graphs and Representation Learning and integrate them into the graph analytics framework GRADOOP

- Build foundations of a graph streaming system that integrates methods for stream-based graph mining and learning

- Investigate incremental graph mining and learning techniques such as graph sketches, incremental grouping, incremental Representation Learning and incremental Frequent Pattern Mining

- Apply graph stream analytics to root cause analysis and anomaly detection
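
To illustrate what incremental grouping over a graph stream could look like, the following minimal Python sketch maintains a summary graph (vertex counts per group and edge counts between groups) while edges are added and removed. It is not GRADOOP code: the class `IncrementalGrouping` and all of its method names are hypothetical and chosen only for this example.

```python
from collections import Counter


class IncrementalGrouping:
    """Hypothetical sketch: keeps group-level counts up to date per stream event."""

    def __init__(self):
        self.vertex_group = {}        # vertex id -> group key (e.g. a label)
        self.group_sizes = Counter()  # group key -> number of vertices
        self.super_edges = Counter()  # (source group, target group) -> edge count

    def add_vertex(self, vertex_id, group):
        # Ignore vertices that were already registered.
        if vertex_id in self.vertex_group:
            return
        self.vertex_group[vertex_id] = group
        self.group_sizes[group] += 1

    def add_edge(self, src, dst):
        # Only the affected super edge is updated; nothing is recomputed.
        key = (self.vertex_group[src], self.vertex_group[dst])
        self.super_edges[key] += 1

    def remove_edge(self, src, dst):
        key = (self.vertex_group[src], self.vertex_group[dst])
        if self.super_edges[key] > 0:
            self.super_edges[key] -= 1
            if self.super_edges[key] == 0:
                del self.super_edges[key]


summary = IncrementalGrouping()
summary.add_vertex(1, "Person")
summary.add_vertex(2, "Person")
summary.add_vertex(3, "City")
summary.add_edge(1, 2)
summary.add_edge(1, 3)
summary.add_edge(2, 3)
summary.remove_edge(1, 3)
print(dict(summary.super_edges))
```

Each stream event touches a single counter, so the summary stays current without regrouping the whole graph after every change.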

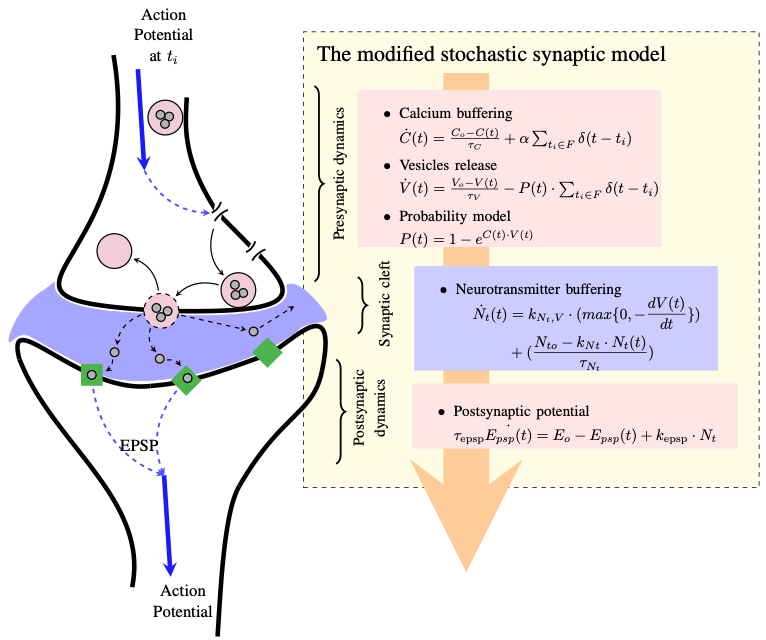

Neuromorphic Information Processing for Intelligent and Cognitive Data Processing (Prof. Bogdan)

Real neuromorphic information processing requires models that are closer to the neurological dynamical system. In this context, so-called Spiking Neural Networks (SNNs), which are based on Integrate-and-Fire (IaF) neurons, already outperform Deep Learning, e.g. in the recognition of handwritten patterns. However, SNNs still lack reliable and universal training algorithms, and dynamic changes in a trained network are not yet possible. This is because the synaptic plasticity required for such changes is not yet implemented in IaF neurons. In our research we envision dynamic synapses based on the Modified Stochastic Synaptic Model (MSSM) (K. Ellaithy and M. Bogdan 2017).
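
For readers unfamiliar with IaF neurons, the following minimal Python sketch simulates a single discrete-time neuron with a fixed input weight. It is a textbook-style illustration, not the MSSM: the function name `simulate_iaf` and the parameters `threshold`, `leak` and `v_reset` are assumptions made for this example.

```python
def simulate_iaf(input_current, threshold=1.0, leak=0.0, v_reset=0.0):
    """Return the time steps at which a simple integrate-and-fire neuron spikes.

    leak=0.0 gives a pure integrator; 0 < leak < 1 gives a leaky variant.
    """
    v = v_reset
    spike_times = []
    for t, current in enumerate(input_current):
        # Integrate: decay the membrane potential, then add the input.
        v = (1.0 - leak) * v + current
        # Fire: emit a spike and reset once the threshold is reached.
        if v >= threshold:
            spike_times.append(t)
            v = v_reset
    return spike_times


print(simulate_iaf([0.5, 0.5, 0.5, 0.5]))
```

The neuron's input coupling never changes during the simulation, which is the limitation described above: without synaptic plasticity, a trained network cannot adapt dynamically.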

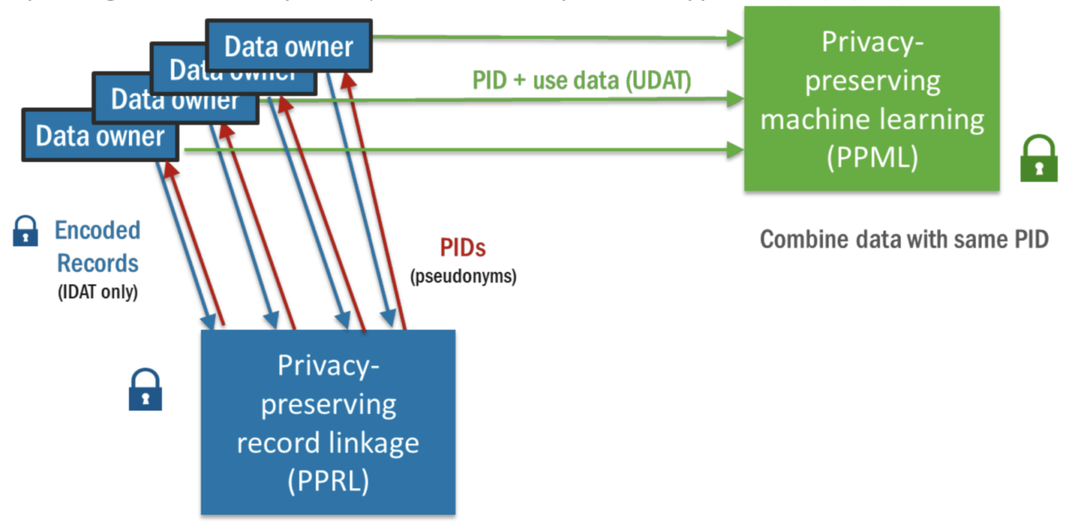

Privacy-Preserving Machine Learning (Prof. Rahm)

When person-related data is analyzed with machine learning methods, a high degree of privacy must be guaranteed so that the identity of individual persons is not revealed. This is especially challenging when person-related data from multiple sources is combined, e.g. user profiles and patient data including clinical and genomic data. Our research addresses this record linkage problem and its combination with Machine Learning techniques.
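
One common way to link records without exchanging plain-text identifiers is to compare keyed Bloom-filter encodings of character bigrams. The Python sketch below illustrates that general idea only. The text above does not state which technique is used, and the function names, the shared `secret`, and the parameters `size` and `num_hashes` are assumptions for this example.

```python
import hashlib
import hmac


def bigrams(value):
    """Split a padded, lower-cased string into its set of character bigrams."""
    padded = f"_{value.lower()}_"
    return {padded[i:i + 2] for i in range(len(padded) - 1)}


def bloom_encode(value, secret, size=1024, num_hashes=4):
    """Return the set bit positions of a keyed Bloom filter for `value`."""
    key = secret.encode("utf-8")
    positions = set()
    for gram in bigrams(value):
        for k in range(num_hashes):
            message = f"{k}|{gram}".encode("utf-8")
            digest = hmac.new(key, message, hashlib.sha256).digest()
            positions.add(int.from_bytes(digest[:8], "big") % size)
    return positions


def dice_similarity(a, b):
    """Dice coefficient of two sets of bit positions."""
    if not a and not b:
        return 1.0
    return 2 * len(a & b) / (len(a) + len(b))


secret = "shared-secret"  # hypothetical key agreed on by the data owners
smith = bloom_encode("Smith", secret)
smyth = bloom_encode("Smyth", secret)
jones = bloom_encode("Jones", secret)
print(dice_similarity(smith, smyth), dice_similarity(smith, jones))
```

Similar names share most of their bigrams and therefore most of their bit positions, so approximate matching still works on the encoded values. Such encodings alone are not a full privacy guarantee and are usually combined with further hardening measures.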

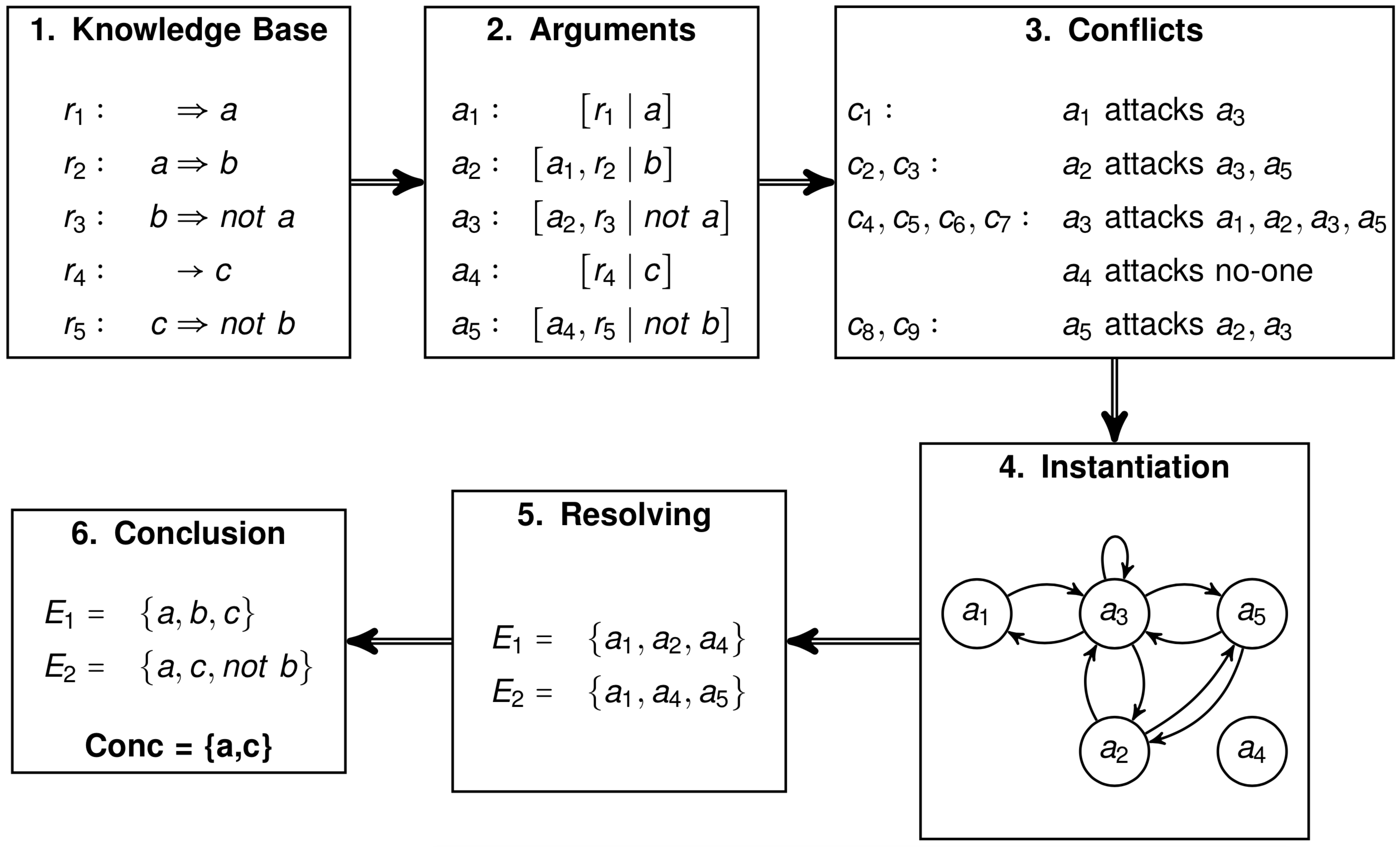

Argument-based Explanations for Trusted AI (Dr. habil. Baumann, Prof. Brewka)

The explainability of AI systems is not just a desirable add-on, but a legal requirement. Recently, there has been a strong focus in AI on computational models of argumentation. They make it possible not only to identify arguments and their relationships in texts or formal knowledge bases, but also to represent these findings in an argument graph and to evaluate such graphs in order to identify reasonable argument sets. This ultimately helps to explore and understand the conclusions drawn. In our research we aim to exploit the progress in the field to generate convincing explanations through the following approaches:

- Translate the representation formalism used in the argument graph and benefit from the graph’s explanation facilities

- Install an argumentation framework as a monitoring system for an underlying AI system
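
As an illustration of how an argument graph can be evaluated to find a reasonable argument set, the following Python sketch computes the grounded extension of an abstract argumentation framework in the sense of Dung. Grounded semantics is only one of several possible semantics and was chosen here for brevity. The function name and the example arguments are hypothetical.

```python
def grounded_extension(arguments, attacks):
    """Compute the grounded extension of an abstract argumentation framework.

    arguments: iterable of argument names
    attacks:   iterable of (attacker, target) pairs over those arguments
    """
    arguments = set(arguments)
    attacks = list(attacks)
    attackers = {a: set() for a in arguments}
    for src, dst in attacks:
        attackers[dst].add(src)

    accepted = set()
    while True:
        # Arguments attacked by an already accepted argument are defeated.
        defeated = {dst for src, dst in attacks if src in accepted}
        # An argument is defended if all of its attackers are defeated.
        newly_accepted = {
            a for a in arguments
            if a not in accepted and attackers[a] <= defeated
        }
        if not newly_accepted:
            return accepted
        accepted |= newly_accepted


# "a" attacks "b", and "b" attacks "c": "a" is unattacked and defends "c".
print(sorted(grounded_extension({"a", "b", "c"}, [("a", "b"), ("b", "c")])))
```

The accepted set can be read as an explanation: "c" is accepted because its only attacker "b" is defeated by the unattacked argument "a".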