Legal and Society

Legal Challenges and Solutions in Big Data Technologies: Privacy by Design (Prof. Müller-Mall)

Big Data and AI technologies play an increasingly important role in both individual lives and society. However, the omnipresence of these technologies entails numerous risks with regard to data protection and data security. Technological development therefore cannot be separated from the further development and investigation of the legal framework.

We aim for a conceptual elaboration of the Privacy-by-Design approach, which is anchored, among other places, in the European General Data Protection Regulation. This comprises identifying the key tensions between data protection principles and the demands of Big Data/AI technologies, taking inventory of and legally evaluating currently available privacy-enhancing technologies, and modelling an enhanced Privacy-by-Design approach tailored to Big Data/AI requirements.

Transparency as a Fundamental Principle of Trustworthy AI: Regulatory Framework and Challenges (Prof. Lauber-Rönsberg)

As part of our research, we address two fundamental issues related to trustworthy AI. On the one hand, there are the ethical guidelines. Here we see transparency as a key requirement that AI systems should meet in order to be deemed trustworthy. This applies not only to implementation, where humans need to be aware that they are interacting with an AI system, but also to the transparency of the data, the system’s capabilities, and AI business models, as well as to explainable AI.

On the other hand, we need a legal framework. This includes data protection law, where we need to clarify: What is the scope and effectiveness of information obligations? What specific obligations apply to automated decision-making systems with regard to the “logic involved”? It also includes fair trading and consumer protection law, since data-driven marketing tools shape decision-making architectures and thereby influence consumers’ decisions. This raises the question of how subliminal marketing practices are regulated by law.

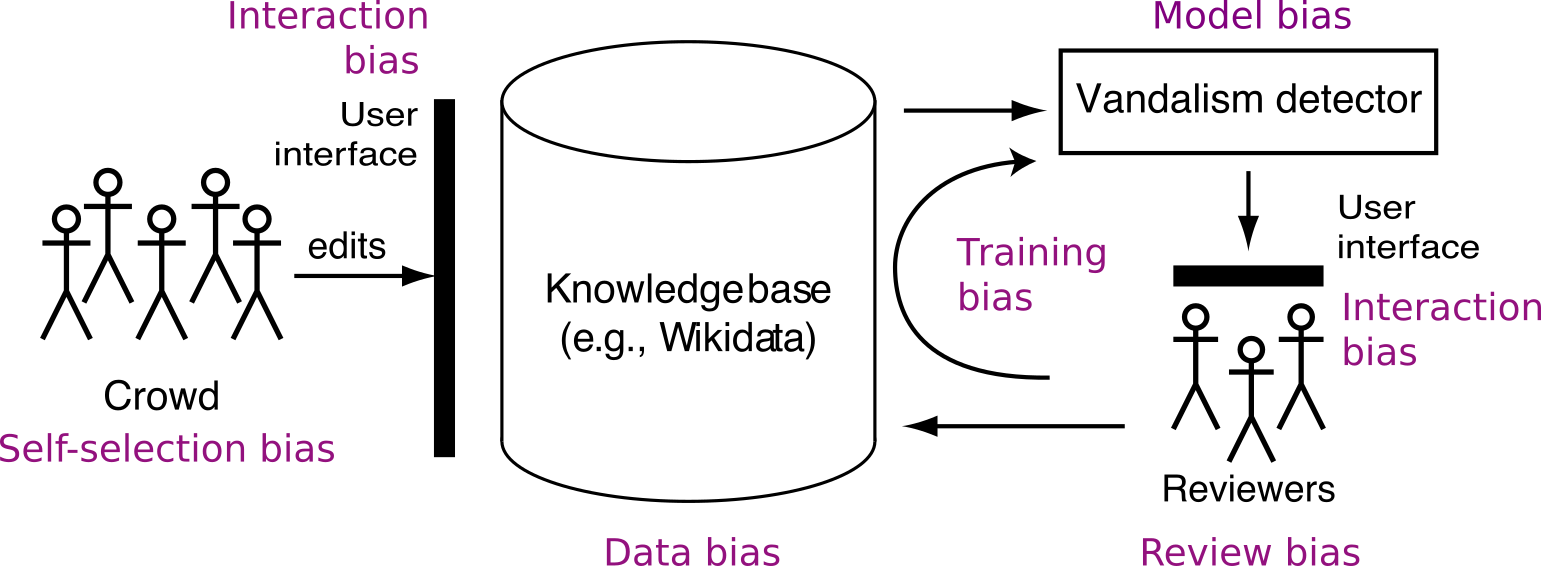

Bias Analytics: Machine Learning and Minority Protection (Jun.-Prof. Martin Potthast)

This project studies the analysis and the management of bias in sociotechnical systems that employ Machine Learning as a tool.

Case Study 1: Vandalism on Wikidata

The damage control system of Wikidata is biased against anonymous editors and newcomers.
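
One way to make such a bias measurable is to compare error rates across editor groups. The following is a minimal sketch, not the project's actual method: it assumes a hypothetical list of reviewed edits, each with an editor group, the classifier's flag, and a ground-truth vandalism label, and computes the false positive rate per group. The function name, the group labels, and the toy data are illustrative assumptions.

```python
from collections import defaultdict


def false_positive_rate_by_group(edits):
    """Return {group: FPR}, where FPR = flagged benign edits / all benign edits.

    `edits` is an iterable of (group, flagged, is_vandalism) tuples.
    Groups without any benign edits are omitted from the result.
    """
    benign = defaultdict(int)
    false_pos = defaultdict(int)
    for group, flagged, is_vandalism in edits:
        if is_vandalism:
            continue  # only benign edits can be false positives
        benign[group] += 1
        if flagged:
            false_pos[group] += 1
    return {g: false_pos[g] / benign[g] for g in benign}


# Hypothetical toy data, invented purely for illustration.
toy_edits = [
    ("anonymous", True, False),
    ("anonymous", True, True),
    ("anonymous", False, False),
    ("registered", False, False),
    ("registered", False, False),
    ("registered", True, True),
    ("registered", True, False),
    ("registered", False, False),
]

rates = false_positive_rate_by_group(toy_edits)
```

A markedly higher false positive rate for one group would mean that benign edits from that group are flagged more often, which is one possible way to operationalize a bias of this kind.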

Case Study 2: The Direct Answer Dilemma

- Conversational search interfaces are “narrow”

  - Users are impatient

  - There is only room for one or two search results

  - Questions need to be answered directly

- Direct answers

  - A direct answer is not necessarily correct

  - Users still presume correctness

  - This presents a strong bias against diversity

- The dilemma: fast answers vs. accurate ones