Cooperations

Version Control for Environmental Modelling Data

Background

The Department of Environmental Informatics of the UFZ develops software for the simulation of environmental phenomena via coupled thermal, hydrological, mechanical and chemical processes by using innovative, numerical methods. Examples include:

- the prediction of groundwater contamination,

- the development of water management schemes, or

- the simulation of innovative means of energy storage.

The modelling process is a complete workflow, starting with data acquisition and -integration to process simulation to analysis and visualization of calculated results.

This modelling process is not transparent and traceable and often poorly documented. A typical model is developed over many weeks or months and usually a large number of revisions are necessary for updating and refining the model such that the simulation is as exact as possible. The first setup of a model is often used to get an overview over existing data and to detect potential problems in both data and numerical requirements.

Further revisions try to solve these problems by adding data, refining or adjusting finite element meshes or updating and adjusting processes and their parametrization. Both input data and parameter files range from few / small up to hundreds of files containing detailed spatial, temporal or numerical information. Likewise, changes from one modelling step to the next may be small (e.g. one parameter value in a single input file) or major (e.g. geometrical input changes and requires a new discretization of the FEM domain as well as a new parameterization).

Currently, all required files are stored locally on the laptop of each scientist. An overview over the evolution of a model is easily lost and it is difficult to trace the order and nature of previous changes. Collaborative work on a single model is difficult. An enormous deficiency of the current solution is that there is no implicit documentation of the changes and each user stores a log of such changes on their own laptop at their discretion.

Objectives

In this cooperation, we develop a solution that circumvents those impediments by introducing a uniform, central and consistent storage of the individual revisions, such that each scientist

- has access to the simulation data they are entitled to

- has a backup if local data is lost or corrupted

- has the possibility to automatically track, analyse and evaluate the changes in each step of the modelling process

Preliminary results

A preliminary prototype for a version control system tailored to the requirements of environmental modelling has been developed, employing KITDM (Karlsruhe Institute of Technology Data Manager) as the software architecture for building up repositories for research data. It provides a modular and extensible framework to adapt to the needs of various scientific communities and use cases and employs established standards and standard technologies. The prototype provides adjustable data storage and data organization, easy to use interfaces, high performance data transfer, a flexible role-based security model for easy sharing of data and offers flexible meta-data indexing and searching functionality. It is seamlessly integrated in MASI (Meta-data Management for Applied Sciences), a current research project which aims at establishing a generic meta-data management for scientific data with research focusing on generic description of meta-data, generic backup and recovery strategies based on meta-data management and enhanced subsequent processing of data.

For the storage of environmental model revisions, we employ an efficient and “disk saving” storage strategy such that specific parameter files are stored only if their content has actually been changed in the latest revision (selective upload strategy). The model development is stored in a tree structure, each node (revision) has a unique link to its predecessor (i.e. the revision from which it has been derived). Due to the tree structure, some nodes (revisions) may become irrelevant if a certain modelling approach has been rejected and development is progressed from a previous iteration.

Meta data for a modelling project (e.g. the name of the project, the researcher in charge, modifications to a certain file in a selected revision, or the tree structure of all revisions) are stored in a meta-data file and thus contain a basic documentation which is automatically generated during the development of a model. Additional meta-data files can be generated as needed by the scientists developing the model. In this way, a preliminary documentation regarding the development of the model is created at the initial set-up of a model and complemented after each revision.

A correspondent download strategy to the „selective upload“ is used, such that the for a specific revision the latest version of all relevant files are downloaded, such that the user always has a complete model available after download.

Team

- Dr. Martin Zinner

- Dr. Karsten Rink

- Dr. Thomas Fischer

- Dr. René Jäkel

Publications

Zinner M, Rink K, Jäkel R, Feldhoff K, Grunzke R, Fischer T, Song R, Walther M, Jejkal T, Kolditz O, Nagel W E: Automatic Documentation of the Development of Numerical Models for Scientific Applications using Specific Revision Control. In Proc of the Twelfth International Conference on Software Engineering Advances, 2017.

ScaDS Research Cluster Management

The high diversity of Big Data researchers’ requirements around ScaDS Dresden/Leipzig is not easily mapped to the many different computing resources available. Research in the project ScaDS Dresden/Leipzig spans a very broad area of topics and fields and the corresponding expected IT infrastructure comprises a wide variety as well. The Apache Hadoop stack is quite common in the Big Data field but not the only one needed and even the Apache Hadoop stack can be required in many variations.

Variety of researchers requirements

ScaDS Dresden/Leipzig is working in many different research areas, this includes the disciplinary research areas of ScaDS Dresden/Leipzig and the computer science. Here are only some examples of the Infrastructure requirements mentioned by researchers so far:

- Schedulers: Apache Hadoop stack (several specific versions) , SLURM, separate cluster of computers

- Software: Apache Zookeeper, Apache HBase, KNIME, research area specific software, …

- Environment: Java (several specific versions), Docker, specific Linux OS, …

- Storage: Apache HDFS, Network storage, SSD, …

Of course researchers want to provide good documentation of their work, so topics like workflow management and provenance for their results are important to them.

Hardware resources in the environment of ScaDS Dresden/Leipzig

High-Performance-Computing (HPC)

- Taurus (ZIH Dresden)

Shared-Nothing-Cluster

- Galaxy Dresden/Leipzig (URZ Leipzig)

- BDCLU (Ifi Leipzig)

- Some cluster at the database department Leipzig

- Small temporary cluster of desktop computers

Virtual Machines

- ZIH Dresden

- URZ Leipzig

In-Memory-Server

- ZIH Dresden

- Sirius (in installation) (URZ Leipzig)

Problem outline

Even with this broad range of resources available, developing an approach to map requirements to existing or to be developed infrastructure offers is an interesting research topic. Let’s outline this using the scheduling of a Shared-Nothing-Cluster as an example:

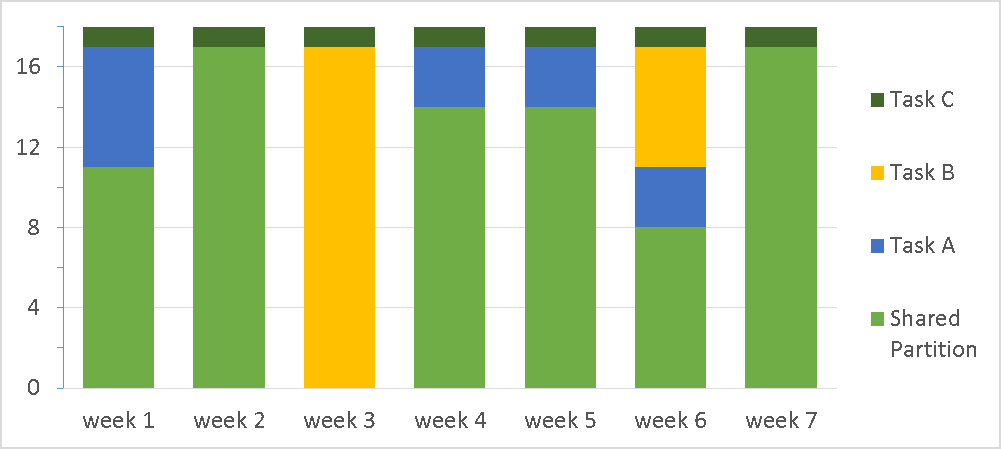

When focusing on the shared nothing infrastructure referred to often in the Big Data area, one gets problems with software configuration management and data locality. A straight forward approach to enable several different cluster configurations on a set of resources is to build temporal subsets (called for example partitions or sub clusters) and configure them according to the specific need.

The problem is to keep the different configurations manageable and reproducible. At the same time it is important to keep the overhead low to enable researchers to get reliable performance results. One possible building block for a solution we are investigating is the usage of lightweight Linux containers like the prominent example Docker. Linux container offer near hardware performance and encapsulate the individual configuration and software stack needed.

InnOPlan

This research project is a cooperation of several industrial partners (KARL STORZ, smartIT, HBT) and research institutes (Leipzig University, University of Hohenheim, University Hospital Heidelberg) for investigating innovative approaches to improve business processes in surgical environments in hospitals.

Background

The surgery area is the heart of each hospital but also the most expensive part, e.g. each operating room costs 1000€ – 3000€ per hour. As in the whole healthcare area there is an ever increasing cost pressure and every gain in efficiency has to be utilized. Additionally, in the highly dynamic and complex operating room area with manifold physicians, staffs and resources many tasks are not supported with information technology. Further the integration on an information or communication level is quite poor.

Objectives

Instead of isolated processes and applications in the operating room area with a wide range of unused data sources our solution approach is to provide efficient surgical processes by smart data services. The research goal is to develop a data-driven way to link and optimize processes and tasks in the operating room area. Besides existing data sources, e.g. hospital information system, a main focus is to integrate data of medical devices, because they allow to get real-time insights into running processes and tasks. Hence a smart data platform is developed, which connects all related data sources and additionally provide solutions for accessing raw data as well as analysed information. Based on this information, preceding and following processes can be integrated. Furthermore, the monitoring and management of the operating room area can be improved and some surgical tasks can be automated.

Preliminary results

The ScaDS Dresden/Leipzig competence center supported InnOPlan with expertise in Big Data architectures and consultancy for using the Apache Hadoop ecosystem. Thanks to this a Big Data framework as a part of the smart data platform was developed. This framework first allows scalable processing of large medical device data in real-time as well as for getting insights in live and historic data by Big Data analytics. For more information visit the project website.

Team

- Norman Spangenberg

Golden Genome Project

Background

In the last years many new genomes are sequenced. So in the field of genomics and transcriptomics the amount of data is getting more and more. This gives great possibilities for the analysis of one species. However, it also gives possibilities to compare two or more species which each other. This comparison is based on the sequences but this is only the starting point. In biology not all mutations can be seen by a local comparison of two sequences. So the solution is to get more information out of the context. Where the context is the surrounding sequences and also other species with sequences that have the same origin. By using this information, a common coordinate system can be created. The comparisons between species are easily possible with this system.

Objectives

In this project, we aim to establish a tool to create a common coordinate system out of a given genome alignment. This tool is using graphs as backbone data structure. These graphs have two advantages: on one hand graph rewriting can be used to reduce the complexity of the problem and on the other hand they give possibilities to get different views on this common coordinate system. The graphs grow fast in size so that scalable Big Data technologies are used to ensure that the system can work on mostly every input size.

Preliminary results

The theory is on the way of publication and a Prototype is in construction where:

- Parsing and filtering are implemented

- A good fitting graph data structure is used

- Efficient rewrite rules are identified on the graphs

- Results can be created

Team

ScaDS Dresden/Leipzig

- Fabian Externbrink

- Falco Kirchner (Student)

Bioinformatics

- Dr. Lydia Müller

- Dr. Christian Höner zu Siederdissen

- Prof. Peter F. Stadler

Collaboration partners

Dr. Michael Hiller (MPI-CBG & MPI-PKS)

Integrating Canonical Text Service Support in the CLARIN Infrastructure

This joint effort of ScaDS Dresden/Leipzig and CLARIN Leipzig resulted in a connection between the Canonical Text Service (CTS) and the Common Language Resources and Technology Infrastructure (CLARIN) that provides a fine-granular reference system for CLARIN and opens its infrastructure for many Digital Humanists across the world.

Background

CLARIN is an European research infrastructure project with the goal to provide a huge interoperable research environment for researchers and is, for example, described in detail in the german article „Was sind IT-basierte Forschungsinfrastrukturen fuer die Geistes- und Sozialwissenschaften und wie koennen sie genutzt werden?“ by Gerhard Heyer, Thomas Eckart and Dirk Goldhahn. Generally, CLARIN combines the efforts of various research groups to build an interoperable set of tools, data sets and web based workflows.

This interoperability for example enables facetted document search over several different servers of different research groups in the Virtual Language Observatory VLO. Some of the more philosophic aspects of the CTS protocol are implied by its self purpose in Digital Humanities that is for example discussed in the according blog post. More technical benefits are a flexible granularity of the references, its highly specialized performant implementation and its position as an address to outsource text content.

Objectives

By adding CTS support in CLARIN, these technical benefits (especially the finer granularity) can directly address some of the issues that are listed by Thomas Eckart in the paper „Jochen Tiepmar, Thomas Eckart, Dirk Goldhahn und Christoph Kuras: Canonical Text Services in CLARIN – Reaching out to the Digital Classics and beyond. In: CLARIN Annual Conference 2016, 2016“

- Many of the current solutions treat textual resources as atomic, i.e. all provided interfaces are focused on the complete resource. The inherent structure of textual data is left to be processed by external tools or manually extracted by the user. Although this being acceptable for some use cases, a highly integrated research environment loses much of its power and applicability for research questions if ignoring this obvious fact.

- Textual resources do not have a typical granularity. Even for rather similar textual resources (like web-based corpora or document-centric collections) it can not be assumed to have a ”default structure” on which analysis or resource aggregation can take place. As a consequence many approaches require and assume a standard format that is foundation for all provided applications and interfaces.

- Granularity has to be addressed as a basic feature of (almost) all textual resources. Current infrastructures make usage of several identification and resolving systems (like Handle, DOI, URNs etc.) but a fine-grained identification and retrieval of (almost) arbitrary parts are hardly supported or have to be artificially modelled using features that these systems provide. As a consequence even textual resources already provided in CLARIN are often not directly accessible or combinable because of the heterogeneity of used reference solutions or the level of supported granularity.

Preliminary results

The result of this work is that CTS URNs for a configurable type of text part are now included in the Virtual Language Observatory together with a import interface for CTS instances. After a very well received presentation at the CLARIN Annual Conference 2016 in Aix-en-Provence, several discussions about a further inclusion and various implications of CTS as a (internally used) text communication protocol have started, that also indicated that – even though this work already provides significant benefits for many researchers – this first step has to be considered as just the tip of the iceberg. Future work will include an interface between the Federated Content Search of CLARIN and the fulltext search of CTS as well as the connection to WebLicht via a MIME-Type based interface.

Mass Spectrometer Data Management @ UFZ

The research project “Management and Processing of Mass Spectrometry Data” is a collaborative project between the Big Data center ScaDS Dresden/Leipzig and the Department of Analytical Chemistry at Helmholtz Centre for Environmental Research (UFZ).

Background

The Helmholtz Centre for Environmental Research (UFZ) investigates the interaction between environment and humans. One focus is the fate of chemicals that are released to the environment with unknown effects for humans and the ecosystem. However, chemical substances outside the laboratory never occur isolated but mix with a background of naturally occurring molecules. This natural organic matter is one of the most complex mixtures of chemical substances and found almost anywhere on the planet.

Currently at the UFZ, state-of-the art analytical tools (e.g. ultra-high resolution mass spectrometry) are used to characterize naturally occurring molecules with high accuracy and precision. Tens of thousands molecules can be detected in each sample and chemical formulas be calculated from precise mass measurements and known masses of atoms. Therefore, data sets produced by these instruments are large, structurally complex, and intrinsically connected by chemical rules and measurement parameters.

Objectives

In this joint project of ScaDS Dresden/Leipzig and the UFZ Department of Analytical Chemistry we aim at developing a Big Data integration and analysis pipeline to facilitate the handling of these expansive data sets. For instance, manual steps in such complex workflows are prone to error, impair reproducibility and limit scalability. We therefore build a flexible end-to-end analytics platform to process, manage and analyze mass spectrometry data of complex mixtures of molecules based on the data analytics, reporting and integration platform KNIME, an Oracle database and the statistical programming language R. We use these powerful tools to implement workflows covering efficient data evaluation algorithms as well as novel visualizations of mass spectrometry data.

Team

ScaDS Dresden/Leipzig

- Dr. Anika Groß

- Dr. Eric Peukert

- Kevin Jakob (Student)

Helmholtz-Zentrum für Umweltforschung UFZ

- Dr. Oliver Lechtenfeld

Preliminary results

- Initial Automation of manual steps with KNIME

- Data management for mass spectrometry data

- Parallelization of sum formula computation

- Automatic extraction of metadata

Outlook

- Automated fast validation of measurements

- Create a service offering at UFZ for many mass spectrometry users