May 8, 2025

KI-Netzwerktreffen: Bridging Technology, Bias, and Human Involvement

As part of ScaDS.AI Dresden/Leipzig's ongoing effort to explore the complex interplay between technology and society, the KI-Netzwerktreffen on ethical AI have proven to be a valuable platform for critical dialogue. Building on the strong interest shown at the previous meeting, our latest session delved deeper into the challenges and opportunities of fair and inclusive AI development.

Recap of Our Previous Meeting (February 27, 2025)

At the end of February, Leipzig University hosted the first KI-Netzwerktreffen at our Living Lab in Leipzig. Educators, researchers, and students gathered to reflect on AI in the first of this bi-monthly series of hybrid meetings. Key highlights included:

Innovative AI Applications: Participants experienced the interactive Magic Mirror demonstrator, which enabled real-time image transformation experiments.

Generative AI Simplified: Matthias Täschner (ScaDS.AI Dresden/Leipzig – Service & Transfer, IT-Coordination) clearly explained the principles behind generative AI.

Critical Reflections: Vanessa Kuhfs (ScaDS.AI Dresden/Leipzig – Design Research for GenAI & HCI) energized the audience with her talk “Who Designs For Whom?”, which examined how power dynamics, gender bias, and ethics shape AI systems.

For those interested in revisiting the full details of that event, please check out our complete recap here.

Furthermore, the lively and insightful debates in February, which centered on clickwork, data biases, and the reflection of societal values in AI, showed that these issues run deep. Recognizing that these topics are foundational rather than superficial, we designed the April session to deepen the discussion and explore concrete pathways forward.

April Session: Deepening the Inquiry

To open the session, Doreen Klein of the E-Learning team invited everyone to take part in a brief survey. She asked attendees to share their institutional affiliation (university and academic department) and to describe their first associations with artificial intelligence.

Next, Johannes Häfner (ScaDS.AI Dresden/Leipzig – Living Lab, Service & Transfer) gave a concise overview of ScaDS.AI Dresden/Leipzig. He emphasized our commitment to connecting innovative research with practical applications in technology transfer and education, and highlighted our role in hosting events that promote knowledge exchange and interdisciplinary collaboration. He also directed participants to the ScaDS.AI homepage, especially the Events section and the Transfer and Service section, for more details.

Revisiting “Who Designs For Whom?”

Building on this momentum, the April meeting offered more room for an in-depth examination of key issues. For a brief impression, we share a 2-minute video recording of Vanessa Kuhfs’ talk (delivered in German).

Vanessa Kuhfs returned to expand on her earlier examination by addressing several pivotal points:

The Locus of Innovation:

The talk examined who is steering technological progress. We discussed how the concentration of design power, often within a small group of organizations, shapes both the direction of technological innovation and its broader social impact. This analysis prompted critical reflection on whether the current locus of innovation truly reflects the diverse needs of society.

Cultural and Gender Dynamics:

Extending her earlier critique, Vanessa Kuhfs offered a rigorous evaluation of how design processes are frequently dominated by Western, male-centric paradigms. She showed that these biases are not confined to technical aspects but extend into the ethical and cultural dimensions of AI. Drawing on historical examples and recent studies, she illustrated how such imbalances can result in technologies that are less inclusive and less adaptable to global contexts.

Ethical Imperatives:

The talk also made a compelling call to place ethical considerations at the heart of technology design. Kuhfs argued that technical proficiency alone is not enough: a more inclusive and socially responsible design approach is needed, one that incorporates interdisciplinary insights and actively addresses issues of equity and accountability. Her remarks underscored the urgency of rethinking design processes to better serve a diverse, global community.

Investigating Clickwork and Data Quality

A substantial part of the April session was devoted to a detailed exploration of the “click, label, repeat” process. This discussion addressed multiple dimensions:

Human-in-the-Loop Considerations:

Participants learned how routine digital interactions, ranging from reCAPTCHA challenges to image categorization on social media platforms, serve as the backbone of AI refinement. Although these tasks may appear mundane, they are essential for improving the accuracy and learning capabilities of AI systems, because they supply the human-labeled examples these systems learn from. The session emphasized that such human involvement is indispensable to the continuous improvement of technology.

Data Integrity and Bias:

Reinforcing the well-known adage “garbage in, garbage out,” the conversation highlighted that the integrity and quality of AI output are intrinsically tied to the quality of the input data. The discussion then turned to how biases embedded in data, often as a consequence of hurried or unregulated clickwork, can result in skewed and unreliable AI outcomes.

Ethical Dilemmas in Microtask Labor:

A critical examination of the “click, label, repeat” model revealed underlying ethical concerns. Panelists discussed how microtask labor, while cost-effective for refining AI systems, can also lead to the exploitation of workers operating under precarious conditions. The dialogue explored potential pathways for mitigating these risks, including the implementation of standardized quality controls, increased transparency in data annotation processes, and the establishment of fair labor practices to ensure respect for and adequate compensation of these contributors.

The Discrimination Cycle:

The session also addressed the concept of the discrimination cycle: a self-reinforcing loop in which biased input data leads to skewed AI outputs that, in turn, validate and amplify the original biases. This cycle perpetuates discrimination by influencing subsequent data collection and decision-making processes. It was emphasized that breaking this cycle requires concerted efforts, such as integrating diverse data sources, establishing robust ethical guidelines for data curation, and consistently monitoring AI outcomes to ensure fairness and accountability.

Group Discussions and Future Directions

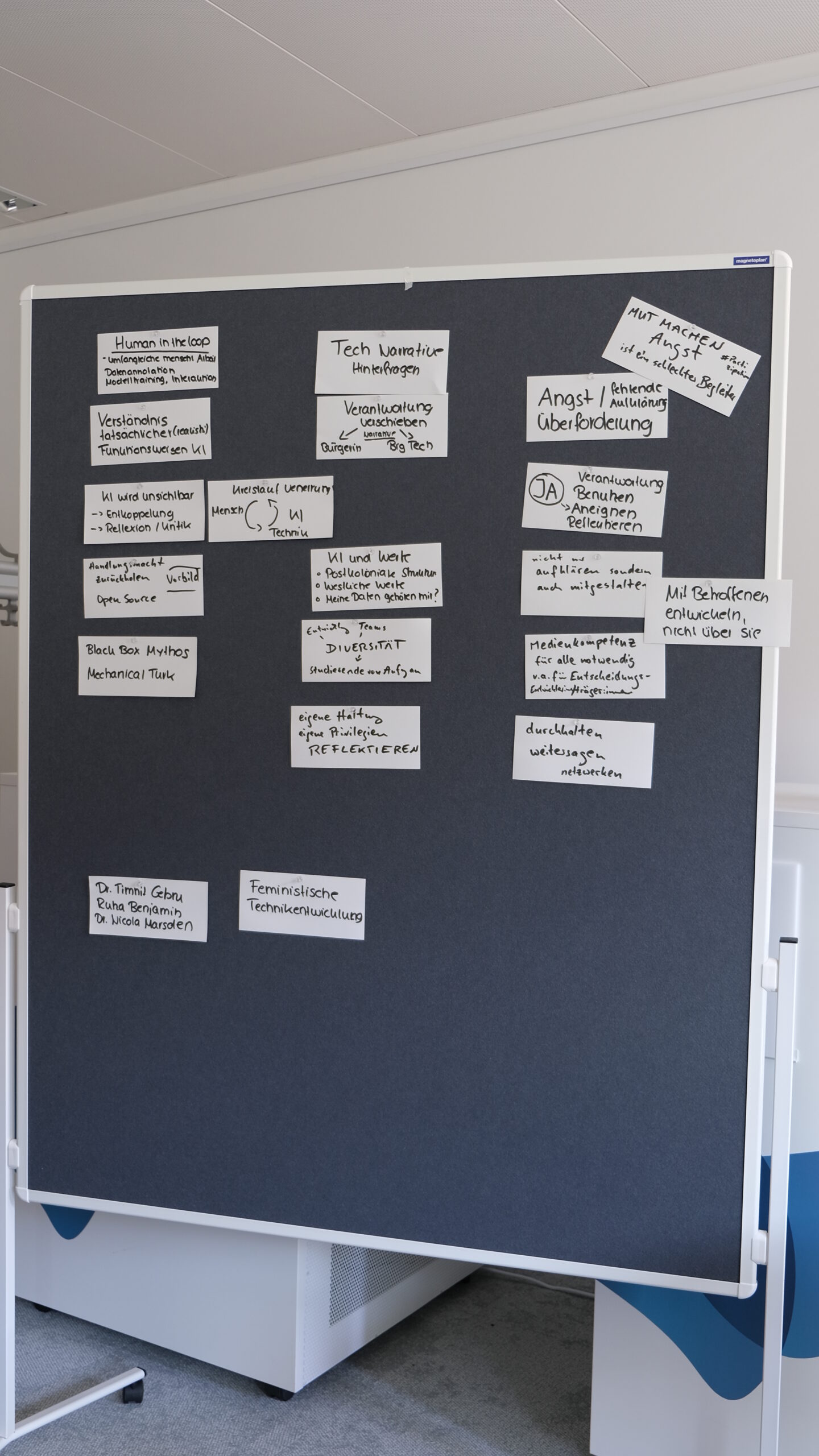

In the subsequent breakout sessions, participants debated further and developed actionable insights on several key questions:

- Disadvantage in Academia and Practice: Identifying specific points in educational and professional settings where AI applications inadvertently propagate inequities

- Mitigation Strategies: Considering practical frameworks and policy measures to counteract the adverse effects of data biases

- Charting New Research Avenues: Identifying unresolved issues and potential interdisciplinary research projects that could foster more responsible AI development

- Assessing Long-Term Societal Implications: Examining how systemic biases in AI could exacerbate socio-economic disparities over time, and calling for mechanisms to regularly monitor and adjust AI systems

- Formulating Best Practice Guidelines: Developing actionable recommendations and guidelines to encourage ethical AI design and responsible data curation in both academic and practical settings

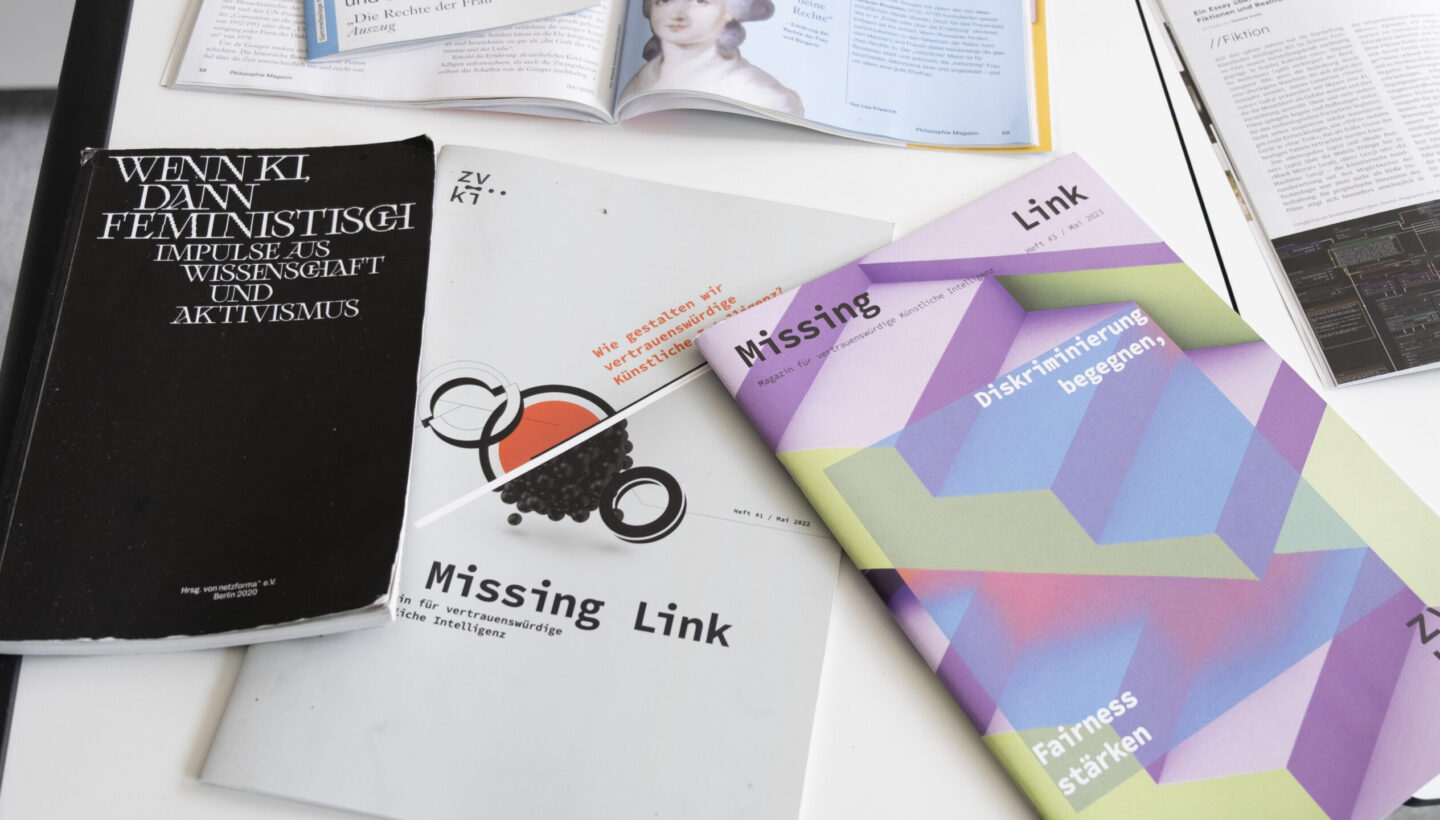

Literature Table

In addition, a literature table allowed participants to browse the books and magazines referenced during the session. It offered a valuable opportunity to start conversations and to engage with seminal texts and contemporary debates on the ethical and societal dimensions of artificial intelligence.

Final Reflections and the Path Forward

The progression from the February session to the April meeting underscores the critical role that informed debate plays in advancing our understanding of artificial intelligence. The discussions on clickwork, data biases, and the societal implications of AI have confirmed the need for further research and collaborative exploration.

At ScaDS.AI Dresden/Leipzig, we remain dedicated to fostering an environment that not only scrutinizes the technical dimensions of AI but also places ethical and social considerations at the forefront. As we look ahead, we invite engagement from academic institutions, industry experts, and policymakers to collectively address these challenges and advance the development of equitable and transparent AI systems.

Further Considerations:

- How might interdisciplinary collaborations further refine the ethical landscape of AI development?

- What regulatory frameworks and institutional policies could support the fair treatment of microtask labor?

- In what ways can the open-source community contribute to dismantling opaque “black-box” methodologies in AI?

We welcome continued dialogue and collaboration as we navigate these profound issues and work together toward an AI-enhanced future that reflects diverse societal values.

For more information and to access past event results and presentation slides, visit the KI-Netzwerktreffen TaskCards.