July 29, 2025

ScaDS.AI Dresden/Leipzig at ICML 2025 in Vancouver, Canada

From July 13 to 19, the International Conference on Machine Learning (ICML) took place in Vancouver. ICML is the premier gathering of professionals dedicated to advancing machine learning, a branch of Artificial Intelligence (AI), and one of the fastest-growing AI conferences worldwide. It is globally renowned for presenting and publishing cutting-edge research on all aspects of machine learning, both in closely related areas such as artificial intelligence, statistics, and data science and in important application areas such as machine vision, computational biology, speech recognition, and robotics.

ICML 2025 offered a fascinating cross-section of machine learning research, with an especially strong emphasis on the current challenges of training large language models (LLMs). Participants came from a wide variety of backgrounds, including academic and industrial researchers, entrepreneurs and engineers, as well as doctoral and postdoctoral researchers.

Our Accepted Contributions

ScaDS.AI Dresden/Leipzig participated in the conference with a wide range of contributions. Here is an overview of the titles, authors and abstracts:

A Sub-Problem Quantum Alternating Operator Ansatz for Correlation Clustering

Authors:

Lucas Fabian Naumann, Jannik Irmai, Bjoern Andres

Abstract:

The Quantum Alternating Operator Ansatz (QAOA) is a hybrid quantum-classical variational algorithm for approximately solving combinatorial optimization problems on Noisy Intermediate-Scale Quantum (NISQ) devices. Although it has been successfully applied to a variety of problems, there is only limited work on correlation clustering due to the difficulty of modelling the problem constraints with the ansatz.

Motivated by this, we present a generalization of QAOA that is more suitable for this problem. In particular, we modify QAOA in two ways: Firstly, we use nucleus sampling for the computation of the expected cost. Secondly, we split the problem into sub-problems, solving each individually with QAOA. We call this generalization the Sub-Problem Quantum Alternating Operator Ansatz (SQAOA) and show theoretically that optimal solutions to correlation clustering instances can be obtained with certainty when the depth of the ansatz tends to infinity. Further, we show experimentally that SQAOA achieves better approximation ratios than QAOA for correlation clustering, while using only one qubit per node of the respective problem instance and reducing the runtime (of simulations).
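To give a feel for the first modification, here is a minimal sketch of how an expected cost could be computed over a "nucleus" of measurement outcomes. It assumes the top-p meaning of nucleus sampling known from text generation: keep the most probable outcomes until their cumulative probability reaches a threshold, then renormalize. The function name `nucleus_expected_cost`, the parameter `top_p`, and the toy numbers are illustrative assumptions and are not taken from the paper, whose exact procedure may differ.

```python
def nucleus_expected_cost(probs, costs, top_p=0.9):
    """Expected cost restricted to the smallest set of most probable
    outcomes whose cumulative probability reaches top_p (renormalized).

    Hypothetical helper for illustration; not the authors' implementation.
    """
    # Rank outcomes from most to least probable.
    ranked = sorted(zip(probs, costs), key=lambda pc: pc[0], reverse=True)

    nucleus, mass = [], 0.0
    for p, c in ranked:
        nucleus.append((p, c))
        mass += p
        if mass >= top_p:
            break  # the nucleus now covers at least top_p of the probability

    # Renormalize by the probability mass that was kept.
    return sum(p * c for p, c in nucleus) / mass


# Toy distribution over four measurement outcomes (made-up numbers).
probs = [0.5, 0.3, 0.15, 0.05]
costs = [1.0, 2.0, 10.0, 100.0]
truncated = nucleus_expected_cost(probs, costs, top_p=0.75)
full = nucleus_expected_cost(probs, costs, top_p=1.0)
```

In this toy case the truncated estimate ignores the rare, high-cost outcomes, which is why it is much lower than the full expectation.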

Implicit Language Models are RNNs: Balancing Parallelization and Expressivity

Authors:

Mark Schöne, Babak Rahmani, Heiner Kremer, Fabian Falck, Hitesh Ballani, Jannes Gladrow

Abstract:

State-space models (SSMs) and transformers dominate the language modeling landscape. However, they are constrained to a lower computational complexity than classical recurrent neural networks (RNNs), limiting their expressivity. In contrast, RNNs lack parallelization during training, raising fundamental questions about the trade-off between parallelization and expressivity. We propose implicit SSMs, which iterate a transformation until convergence to a fixed point.

Theoretically, we show that implicit SSMs implement the non-linear state-transitions of RNNs. Empirically, we find that only approximate fixed-point convergence suffices, enabling the design of a scalable training curriculum that largely retains parallelization, with full convergence required only for a small subset of tokens. Our approach demonstrates superior state-tracking capabilities on regular languages, surpassing transformers and SSMs. We further scale implicit SSMs to natural language reasoning tasks and pretraining of large-scale language models up to 1.3B parameters on 207B tokens – representing, to our knowledge, the largest implicit model trained to date. Notably, our implicit models outperform their explicit counterparts on standard benchmarks.
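The core idea of iterating a transformation until it reaches a fixed point can be illustrated with a deliberately tiny example. The sketch below is not the authors' model: it uses a scalar toy map, and the names `fixed_point` and `toy_transformation` as well as the weights are assumptions made for illustration. It only shows that a looser tolerance (approximate convergence) needs fewer iterations than a tight one.

```python
import math


def fixed_point(f, z0, tol=1e-8, max_iter=100):
    """Iterate z <- f(z) until successive values differ by less than tol.

    Returns the final value and the number of iterations used.
    """
    z = z0
    for i in range(1, max_iter + 1):
        z_next = f(z)
        if abs(z_next - z) < tol:
            return z_next, i
        z = z_next
    return z, max_iter


def toy_transformation(x, w_z=0.5, w_x=1.0):
    """Build a scalar map z -> tanh(w_z * z + w_x * x).

    Because |w_z| < 1 and tanh has slope at most 1, the map is a
    contraction, so the iteration is guaranteed to converge.
    """
    return lambda z: math.tanh(w_z * z + w_x * x)


f = toy_transformation(x=0.7)
z_tight, iters_tight = fixed_point(f, z0=0.0, tol=1e-8)
z_loose, iters_loose = fixed_point(f, z0=0.0, tol=1e-2)
```

Here `z_loose` is only an approximate fixed point, but it is obtained in fewer steps, which mirrors the trade-off between convergence accuracy and compute described in the abstract.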

GreeDy and CoDy: Counterfactual Explainer for Dynamic Graphs

Authors:

Zhan Qu, Daniel Gomm, Michael Färber

Abstract:

Temporal Graph Neural Networks (TGNNs) are widely used to model dynamic systems where relationships and features evolve over time. Although TGNNs demonstrate strong predictive capabilities in these domains, their complex architectures pose significant challenges for explainability. Counterfactual explanation methods provide a promising solution by illustrating how modifications to input graphs can influence model predictions. To address this challenge, we present CoDy—Counterfactual Explainer for Dynamic Graphs—a model-agnostic, instance-level explanation approach that identifies counterfactual subgraphs to interpret TGNN predictions. CoDy employs a search algorithm that combines Monte Carlo Tree Search with heuristic selection policies, efficiently exploring a vast search space of potential explanatory subgraphs by leveraging spatial, temporal, and local gradient information. Extensive experiments against state-of-the-art factual and counterfactual baselines demonstrate CoDy’s effectiveness, achieving average scores up to 6.11 times higher than the baselines.

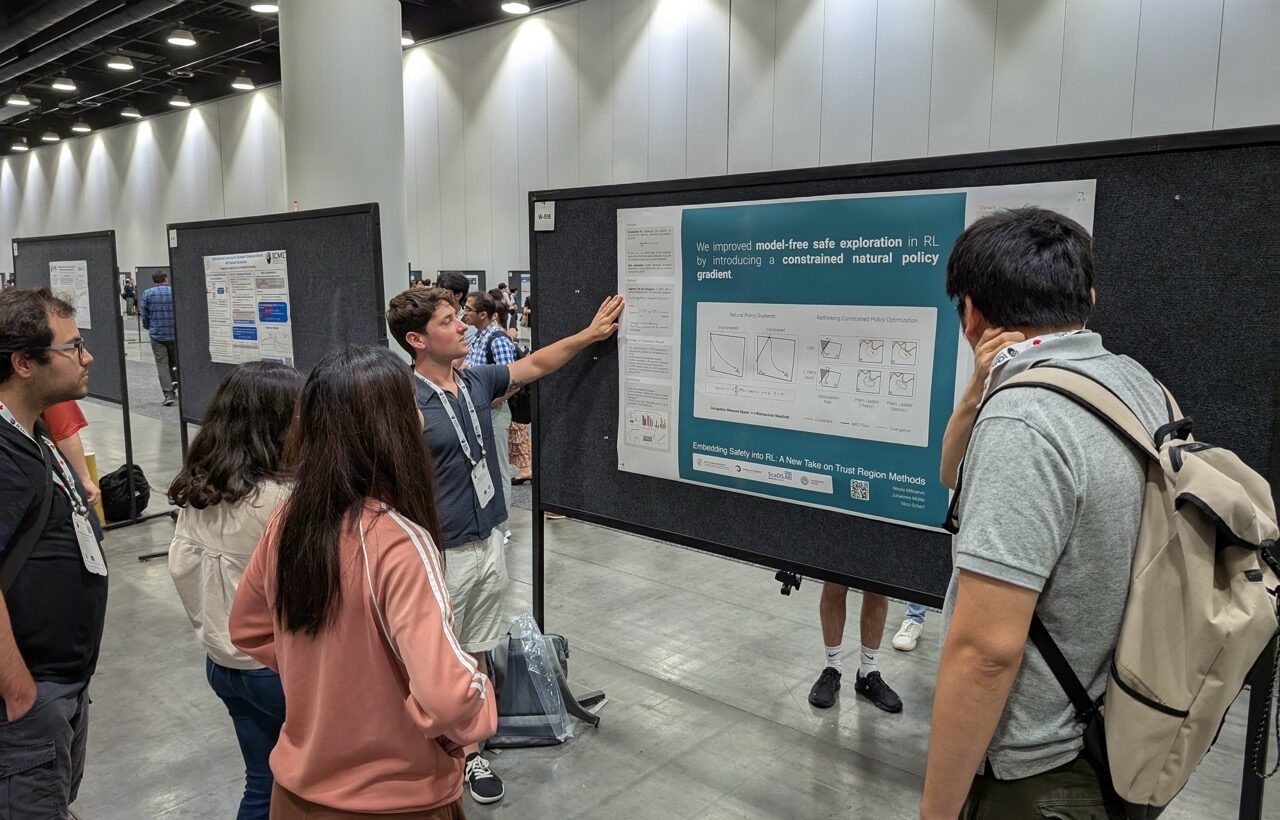

Embedding Safety into RL: A New Take on Trust Region Methods

Authors:

Nikola Milosevic, Johannes Müller, Nico Scherf

Abstract:

Reinforcement learning (RL) is a type of AI that learns by trial and error, often achieving impressive results in games, robotics, and other tasks that require reasoning in multiple steps. But this trial-and-error process can lead to unsafe behavior while the system is still learning—like breaking rules or taking risky actions.

Our work introduces a new method called Constrained Trust Region Policy Optimization (C-TRPO) that helps RL systems stay safe while learning without making any specific assumptions about the task. Instead of allowing the system to explore freely and hoping it stays within limits, C-TRPO carefully guides the learning process so that all new behaviors are safe by design. This means it avoids unsafe actions not just at the end, but throughout training. We also show how our method connects to other popular approaches and test it on several tasks. The results show that C-TRPO keeps the system within safety limits while still performing well.

Central Path Proximal Policy Optimization

Authors:

Nikola Milosevic, Johannes Müller, Nico Scherf

Abstract:

In constrained Markov decision processes, enforcing constraints during training is often thought of as decreasing the final return. Recently, it was shown that constraints can be incorporated directly in the policy geometry, yielding an optimization trajectory close to the central path of a barrier method, which does not compromise final return. Building on this idea, we introduce Central Path Proximal Policy Optimization (C3PO), a simple modification of PPO that produces policy iterates that stay close to the central path of the constrained optimization problem. Compared to existing on-policy methods, C3PO delivers improved performance with tighter constraint enforcement, suggesting that central path-guided updates offer a promising direction for constrained policy optimization.

Hyperband-based Bayesian Optimization for Black-box Prompt Selection

Authors:

Lennart Schneider, Martin Wistuba, Aaron Klein, Jacek Golebiowski, Giovanni Zappella, Felice Antonio Merra

Abstract:

Optimal prompt selection is crucial for maximizing large language model (LLM) performance on downstream tasks. As the most powerful models are proprietary and can only be invoked via an API, users often manually refine prompts in a black-box setting by adjusting instructions and few-shot examples until they achieve good performance as measured on a validation set. Recent methods addressing static black-box prompt selection face significant limitations: They often fail to leverage the inherent structure of prompts, treating instructions and few-shot exemplars as a single block of text. Moreover, they often lack query-efficiency by evaluating prompts on all validation instances, or risk sub-optimal selection of a prompt by using random subsets of validation instances.

We introduce HbBoPs, a novel Hyperband-based Bayesian optimization method for black-box prompt selection addressing these key limitations. Our approach combines a structural-aware deep kernel Gaussian Process to model prompt performance with Hyperband as a multi-fidelity scheduler to select the number of validation instances for prompt evaluations. The structural-aware modeling approach utilizes separate embeddings for instructions and few-shot exemplars, enhancing the surrogate model’s ability to capture prompt performance and predict which prompt to evaluate next in a sample-efficient manner. Together with Hyperband as a multi-fidelity scheduler, we further enable query-efficiency by adaptively allocating resources across different fidelity levels, keeping the total number of validation instances prompts are evaluated on low. Extensive evaluation across ten benchmarks and three LLMs demonstrates that HbBoPs outperforms state-of-the-art methods.
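The multi-fidelity idea can be sketched with plain successive halving, the building block that Hyperband repeats with different starting budgets. This is a simplified illustration, not HbBoPs: there is no Gaussian Process surrogate, and the function `successive_halving`, the scoring function `toy_score`, and the prompt names are hypothetical stand-ins for real LLM calls.

```python
import random


def successive_halving(prompts, score_fn, validation, min_instances=2, eta=2, seed=0):
    """Pick a prompt by evaluating many prompts on few validation instances
    and only the survivors on more instances.

    Returns the winning prompt and the total number of (prompt, instance)
    evaluations. Scores are recomputed each round for simplicity; a real
    implementation would likely cache them.
    """
    rng = random.Random(seed)
    order = list(validation)
    rng.shuffle(order)  # fixed random order of validation instances

    survivors = list(prompts)
    budget = min_instances
    queries = 0
    while len(survivors) > 1:
        subset = order[: min(budget, len(order))]
        scores = {}
        for prompt in survivors:
            scores[prompt] = sum(score_fn(prompt, x) for x in subset) / len(subset)
            queries += len(subset)
        # Keep the best 1/eta of the prompts and raise the fidelity.
        survivors.sort(key=scores.get, reverse=True)
        survivors = survivors[: max(1, len(survivors) // eta)]
        budget *= eta
    return survivors[0], queries


# Toy setup: each prompt has a fixed made-up quality, independent of the instance.
quality = {"prompt_a": 0.2, "prompt_b": 0.5, "prompt_c": 0.7, "prompt_d": 0.9}


def toy_score(prompt, instance):
    return quality[prompt]


validation_set = list(range(20))
best, used_queries = successive_halving(list(quality), toy_score, validation_set)
full_cost = len(quality) * len(validation_set)
```

In this toy run, weak prompts are discarded after only two validation instances, so `used_queries` stays well below `full_cost`, the cost of scoring every prompt on the whole validation set.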

Diverse contributions on a large scale

In addition to our own contributions and papers, there was much more to discover. In total, there were over 3,000 papers, 33 workshops, 11 tutorials, 28 expos, and much more. The invited talks covered topics such as copyright law in the application of AI and the question of how societies have historically measured public preferences and moral norms.